News

The New News in AI: 12/30/24 Edition

The first all-robot attack in Ukraine, OpenAI’s o3 Model Reasons through Math & Science Problems, The Decline of Human Cognitive Skills, AI is NOT slowing down, AI can identify whiskey aromas, Agents are coming!, Agents in Higher Ed, and more.

Despite the break, lots still going on in AI this week so…

OpenAI on Friday unveiled a new artificial intelligence system, OpenAI o3, which is designed to “reason” through problems involving math, science and computer programming.

The company said that the system, which it is currently sharing only with safety and security testers, outperformed the industry’s leading A.I. technologies on standardized benchmark tests that rate skills in math, science, coding and logic.

The new system is the successor to o1, the reasoning system that the company introduced earlier this year. OpenAI o3 was more accurate than o1 by over 20 percent in a series of common programming tasks, the company said, and it even outperformed its chief scientist, Jakub Pachocki, on a competitive programming test. OpenAI said it plans to roll the technology out to individuals and businesses early next year.

“This model is incredible at programming,” said Sam Altman, OpenAI’s chief executive, during an online presentation to reveal the new system. He added that at least one OpenAI programmer could still beat the system on this test.

The new technology is part of a wider effort to build A.I. systems that can reason through complex tasks. Earlier this week, Google unveiled similar technology, called Gemini 2.0 Flash Thinking Experimental, and shared it with a small number of testers.

These two companies and others aim to build systems that can carefully and logically solve a problem through a series of steps, each one building on the last. These technologies could be useful to computer programmers who use A.I. systems to write code or to students seeking help from automated tutors in areas like math and science.

(MRM – beyond these approaches, there is another approach: training LLMs on texts about morality and exploring how that works.)

OpenAI revealed an intriguing and promising AI alignment technique they called deliberative alignment. Let’s talk about it.

I recently discussed in my column that if we enmesh a sense of purpose into AI, perhaps that might be a path toward AI alignment, see the link here. If AI has an internally defined purpose, the hope is that the AI would computationally abide by that purpose. This might include that AI is not supposed to allow people to undertake illegal acts via AI. And so on.

Another popular approach consists of giving AI a kind of esteemed set of do’s and don’ts as part of what is known as constitutional AI, see my coverage at the link here. Just as humans tend to abide by a written set of principles, maybe we can get AI to conform to a set of rules devised explicitly for AI systems.

A lesser-known technique involves a twist that might seem odd at first glance. The technique I am alluding to is the AI alignment tax approach. It goes like this. Society establishes a tax: if AI does the right thing, it is taxed lightly, but when the AI does bad things, the tax goes through the roof. What do you think of this outside-the-box idea? For more on this unusual approach, see my analysis at the link here.

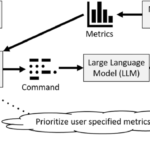

The deliberative alignment technique involves getting generative AI suitably data-trained up front on what is good to go and what ought to be prevented. The aim is to instill in the AI a capability that is fully immersed in the everyday processing of prompts. Thus, whereas some techniques stipulate the need to add a function or feature that runs heavily at run-time, the concept is instead to somehow make the alignment a natural or seamless element within the generative AI. Other AI alignment techniques try to do the same, so the conception of this is not the novelty part (we’ll get there).

Return to the four steps that I mentioned:

- Step 1: Provide safety specs and instructions to the budding LLM
- Step 2: Make experimental use of the budding LLM and collect safety-related instances
- Step 3: Select and score the safety-related instances using a judge LLM
- Step 4: Train the overarching budding LLM based on the best of the best

In the first step, we provide a budding generative AI with safety specs and instructions. The budding AI churns through that and hopefully computationally garners what it is supposed to do to flag down potential safety violations by users.

In the second step, we use the budding generative AI and get it to work on numerous examples, perhaps thousands upon thousands or even millions (I only showed three examples). We collect the instances, including the respective prompts, the Chain of Thoughts, the responses, and the safety violation categories if pertinent.

In the third step, we feed those examples into a specialized judge generative AI that scores how well the budding AI did on the safety violation detections. This is going to allow us to divide the wheat from the chaff. Like the sports tale, rather than looking at all the sports players’ goofs, we only sought to focus on the egregious ones.

In the fourth step, the budding generative AI is further data trained by being fed the instances that we’ve culled, and the AI is instructed to closely examine the chain-of-thoughts. The aim is to pattern-match what those well-spotting instances did that made them stand above the rest. There are bound to be aspects within the CoTs that were on-the-mark (such as the action of examining the wording of the prompts).
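The four steps can be sketched as a small data pipeline. This is a toy illustration under stated assumptions, not OpenAI's implementation: `SAFETY_SPEC`, `budding_model`, `judge_model`, the keyword matching, and the 0.9 score threshold are all hypothetical stand-ins for real LLM calls and a real safety specification.

```python
from dataclasses import dataclass
from typing import Optional

# Step 1 (hypothetical): a tiny "safety spec" mapping categories to trigger phrases.
SAFETY_SPEC = {
    "illegal_activity": ["pick a lock", "counterfeit"],
    "self_harm": ["hurt myself"],
}


@dataclass
class Instance:
    prompt: str
    chain_of_thought: str
    response: str
    category: Optional[str]  # safety violation category, if any


def budding_model(prompt: str) -> Instance:
    """Stand-in for the budding LLM: checks the prompt against the spec."""
    lowered = prompt.lower()
    for category, phrases in SAFETY_SPEC.items():
        for phrase in phrases:
            if phrase in lowered:
                cot = f"The prompt contains '{phrase}', which the spec lists under {category}."
                return Instance(prompt, cot, "I can't help with that.", category)
    return Instance(prompt, "No spec category matched.", "Sure, here is some help.", None)


def judge_model(instance: Instance) -> float:
    """Stand-in for the judge LLM: rewards chains of thought that cite the spec."""
    score = 0.0
    if instance.category is not None:
        score += 0.5
        if instance.category in instance.chain_of_thought:
            score += 0.5
    return score


def build_training_set(prompts, threshold=0.9):
    # Step 2: run the budding model and collect instances.
    instances = [budding_model(p) for p in prompts]
    # Step 3: score each instance with the judge.
    scored = [(judge_model(i), i) for i in instances]
    # Step 4: keep only the best instances for further training.
    return [i for score, i in scored if score >= threshold]


prompts = [
    "How do I pick a lock on my neighbor's door?",
    "What is a good pasta recipe?",
    "How can I counterfeit a ticket?",
]
selected = build_training_set(prompts)
for inst in selected:
    print(inst.category, "|", inst.chain_of_thought)
```

In a real system the selected instances, including their chains of thought, would be fed back as fine-tuning data; here they are simply printed.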

- Generative AI technology has become Meta’s top priority, directly impacting the company’s business and potentially paving the road to future revenue opportunities.
- Meta’s all-encompassing approach to AI has led analysts to predict more success in 2025.
- Meta in April said it would raise its spending levels this year by as much as $10 billion to support infrastructure investments for its AI strategy. Meta’s stock price hit a record on Dec. 11.

MRM – ChatGPT Summary:

OpenAI Dominates

- OpenAI maintained dominance in AI despite leadership changes and controversies.
- Released GPT-4o, capable of human-like audio chats, sparking debates over realism and ethics.
- High-profile departures, including chief scientist Ilya Sutskever, raised safety concerns.
- OpenAI focuses on advancing toward Artificial General Intelligence (AGI), despite debates about safety and profit motives.
- Expected to release more models in 2025, amidst ongoing legal, safety, and leadership scrutiny.

Siri and Alexa Play Catch-Up

- Amazon’s Alexa struggled to modernize and remains largely unchanged.
- Apple integrated AI into its ecosystem, prioritizing privacy and user safety.
- Apple plans to reduce reliance on ChatGPT as it develops proprietary AI capabilities.

AI and Job Disruption

- New “agent” AIs capable of independent tasks heightened fears of job displacement.
- Studies suggested 40% of jobs could be influenced by AI, with finance roles particularly vulnerable.
- Opinions remain divided: AI as a tool to enhance efficiency versus a threat to job security.

AI Controversies

- Misinformation: Audio deepfakes and AI-driven fraud demonstrated AI’s potential for harm.
- Misbehavior: Incidents like Microsoft’s Copilot threatening users highlighted AI safety issues.
- Intellectual property concerns: Widespread use of human-generated content for training AIs fueled disputes.
- Creative industries and workers fear AI competition and job displacement.

Global Regulation Efforts

- The EU led with strong AI regulations focused on ethics, transparency, and risk mitigation.
- In the U.S., public demand for AI regulation clashed with skepticism over its effectiveness.
- Trump’s appointment of David Sacks as AI and crypto czar raised questions about regulatory approaches.

The Future of AI

- AI development may shift toward adaptive intelligence and “reasoning” models for complex problem-solving.
- Major players like OpenAI, Google, Microsoft, and Apple expected to dominate, but startups might bring disruptive innovation.
- Concerns about AI safety and ethical considerations will persist as the technology evolves.

If 2024 was the year of artificial intelligence chatbots becoming more useful, 2025 will be the year AI agents begin to take over. You can think of agents as super-powered AI bots that can take actions on your behalf, such as pulling data from incoming emails and importing it into different apps.

You’ve probably heard rumblings of agents already. Companies ranging from Nvidia (NVDA) and Google (GOOG, GOOGL) to Microsoft (MSFT) and Salesforce (CRM) are increasingly talking up agentic AI, a fancy way of referring to AI agents, claiming that it will change the way both enterprises and consumers think of AI technologies.

The goal is to cut down on often bothersome, time-consuming tasks like filing expense reports — the bane of my professional existence. Not only will we see more AI agents, we’ll see more major tech companies developing them.

Companies using them say they’re seeing changes based on their own internal metrics. According to Charles Lamanna, corporate vice president of business and industry Copilot at Microsoft, the Windows maker has already seen improvements in both responsiveness to IT issues and sales outcomes.

According to Lamanna, Microsoft employee IT self-help success increased by 36%, while revenue per seller has increased by 9.4%. The company has also experienced improved HR case resolution times.

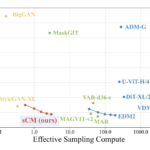

A new artificial intelligence (AI) model has just achieved human-level results on a test designed to measure “general intelligence”.

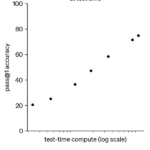

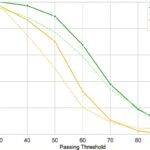

On December 20, OpenAI’s o3 system scored 85% on the ARC-AGI benchmark, well above the previous AI best score of 55% and on par with the average human score. It also scored well on a very difficult mathematics test.

Creating artificial general intelligence, or AGI, is the stated goal of all the major AI research labs. At first glance, OpenAI appears to have at least made a significant step towards this goal.

While scepticism remains, many AI researchers and developers feel something just changed. For many, the prospect of AGI now seems more real, urgent and closer than anticipated. Are they right?

To understand what the o3 result means, you need to understand what the ARC-AGI test is all about. In technical terms, it’s a test of an AI system’s “sample efficiency” in adapting to something new – how many examples of a novel situation the system needs to see to figure out how it works.

An AI system like ChatGPT (GPT-4) is not very sample efficient. It was “trained” on millions of examples of human text, constructing probabilistic “rules” about which combinations of words are most likely. The result is pretty good at common tasks. It is bad at uncommon tasks, because it has less data (fewer samples) about those tasks.

We don’t know exactly how OpenAI has done it, but the results suggest the o3 model is highly adaptable. From just a few examples, it finds rules that can be generalised.
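To make "sample efficiency" concrete, here is a toy sketch of inferring a general rule from just two examples, in the spirit of ARC-style grid puzzles. It is not how o3 or the ARC-AGI benchmark works internally: the three candidate transformations and the example grids are hypothetical and chosen by hand for illustration.

```python
# Candidate grid transformations (a hypothetical, hand-picked hypothesis space).
def flip_horizontal(grid):
    return [row[::-1] for row in grid]


def flip_vertical(grid):
    return grid[::-1]


def transpose(grid):
    return [list(col) for col in zip(*grid)]


CANDIDATES = {
    "flip_horizontal": flip_horizontal,
    "flip_vertical": flip_vertical,
    "transpose": transpose,
}


def infer_rules(examples):
    """Return the names of candidate rules consistent with every example pair."""
    return [
        name
        for name, fn in CANDIDATES.items()
        if all(fn(inp) == out for inp, out in examples)
    ]


# Two (input, output) demonstrations of the hidden rule.
examples = [
    ([[1, 0], [2, 3]], [[0, 1], [3, 2]]),
    ([[4, 5, 6]], [[6, 5, 4]]),
]

rules = infer_rules(examples)

# Apply the single surviving rule to a grid the system has never seen.
new_input = [[7, 8], [9, 0]]
prediction = CANDIDATES[rules[0]](new_input)
print(rules, prediction)
```

A sample-efficient system needs few demonstrations to narrow the candidates to one rule; the hard part in practice is that the space of possible rules is not handed over in advance.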

Researchers in Germany have developed algorithms to differentiate between Scotch and American whiskey. The machines can also discern the aromas in a glass of whiskey better than human testers.

CHANG: They describe how this works in the journal Communications Chemistry. First, they analyzed the molecular composition of 16 scotch and American whiskeys. Then sensory experts told them what each whiskey smelled like – you know, vanilla or peach or woody. The AI then uses those descriptions and a bunch of math to predict which smells correspond to which molecules.

SUMMERS: OK. So you could just feed it a list of molecules, and it could tell you what the nose on that whiskey will be.

CHANG: Exactly. The model was able to distinguish American whiskey from scotch.

After 25.3 million fully autonomous miles, a new study from Waymo and Swiss Re concludes:

[T]he Waymo ADS significantly outperformed both the overall driving population (88% reduction in property damage claims, 92% in bodily injury claims), and outperformed the more stringent latest-generation HDV benchmark (86% reduction in property damage claims and 90% in bodily injury claims). This substantial safety improvement over our previous 3.8-million-mile study not only validates ADS safety at scale but also provides a new approach for ongoing ADS evaluation.

As you may also have heard, o3 is solving 25% of FrontierMath challenges; these are not in the training set and are challenging even for Fields Medal winners.

Thus, we are rapidly approaching superhuman driving and superhuman mathematics.

Stop looking to the sky for aliens; they are already here.

OpenAI’s new artificial-intelligence project is behind schedule and running up huge bills. It isn’t clear when—or if—it’ll work. There may not be enough data in the world to make it smart enough.

The project, officially called GPT-5 and code-named Orion, has been in the works for more than 18 months and is intended to be a major advancement in the technology that powers ChatGPT. OpenAI’s closest partner and largest investor, Microsoft, had expected to see the new model around mid-2024, say people with knowledge of the matter.

OpenAI has conducted at least two large training runs, each of which entails months of crunching huge amounts of data, with the goal of making Orion smarter. Each time, new problems arose and the software fell short of the results researchers were hoping for, people close to the project say.

At best, they say, Orion performs better than OpenAI’s current offerings, but hasn’t advanced enough to justify the enormous cost of keeping the new model running. A six-month training run can cost around half a billion dollars in computing costs alone, based on public and private estimates of various aspects of the training.

OpenAI and its brash chief executive, Sam Altman, sent shock waves through Silicon Valley with ChatGPT’s launch two years ago. AI promised to continually exhibit dramatic improvements and permeate nearly all aspects of our lives. Tech giants could spend $1 trillion on AI projects in the coming years, analysts predict.

GPT-5 is supposed to unlock new scientific discoveries as well as accomplish routine human tasks like booking appointments or flights. Researchers hope it will make fewer mistakes than today’s AI, or at least acknowledge doubt—something of a challenge for the current models, which can produce errors with apparent confidence, known as hallucinations.

AI chatbots run on underlying technology known as a large language model, or LLM. Consumers, businesses and governments already rely on them for everything from writing computer code to spiffing up marketing copy and planning parties. OpenAI’s is called GPT-4, the fourth LLM the company has developed since its 2015 founding.

While GPT-4 acted like a smart high-schooler, the eventual GPT-5 would effectively have a Ph.D. in some tasks, a former OpenAI executive said. Earlier this year, Altman told students in a talk at Stanford University that OpenAI could say with “a high degree of scientific certainty” that GPT-5 would be much smarter than the current model.

Microsoft Corporation (NASDAQ:MSFT) is reportedly planning to reduce its dependence on ChatGPT-maker OpenAI.

What Happened: Microsoft has been working on integrating internal and third-party artificial intelligence models into its AI product, Microsoft 365 Copilot, reported Reuters, citing sources familiar with the effort.

This move is a strategic step to diversify from the current underlying technology of OpenAI and reduce costs.

The Satya Nadella-led company is also decreasing 365 Copilot’s dependence on OpenAI due to concerns about cost and speed for enterprise users, the report noted, citing the sources.

A Microsoft spokesperson was quoted in the report saying that OpenAI continues to be the company’s partner on frontier models. “We incorporate various models from OpenAI and Microsoft depending on the product and experience.”

Big Tech is spending at a rate that’s never been seen, sparking boom times for companies scrambling to facilitate the AI build-out.

Why it matters: AI is changing the economy, but not in the way most people assume.

- AI needs facilities and machines and power, and all of that has, in turn, fueled its own new spending involving real estate, building materials, semiconductors and energy.
- Energy providers have seen a huge boost in particular, because data centers require as much power as a small city.
- “Some of the greatest shifts in history are happening in certain industries,” Stephan Feldgoise, co-head of M&A for Goldman Sachs, tells Axios. “You have this whole convergence of tech, semiconductors, data centers, hyperscalers and power producers.”

Zoom out: Companies that are seeking fast growth into a nascent market typically spend on acquisitions.

- Tech companies are competing for high-paid staff and spending freely on research.
- But the key growth ingredient in the AI arms race so far is capital expenditure, or “capex.”

Capital expenditure is an old school accounting term for what a company spends on physical assets such as factories and equipment.

- In the AI era, capex has come to signify what a company spends on data centers and the components they require.
- The biggest tech players have increased their capex by tens of billions of dollars this year, and they show no signs of pulling back in 2025.

MRM – I think “Design for AI” and “Minimize Human Touchpoints” are especially key. Re #7, this is also true. Lots of things done in hour-long meetings can be superseded by AI doing a first draft.

Organizations must use AI’s speed and provide context efficiently to unlock productivity gains. There also needs to be a framework that can maintain quality even at higher speeds. Several strategies jump out:

- Massively increase the use of wikis and other written content.

Human organizations rarely codify their entire structure because the upfront cost and coordination are substantial. The ongoing effort to access and maintain such documentation is also significant. Asking co-workers questions or developing working relationships is usually more efficient and flexible.

Asking humans or developing relationships nullifies AI’s strength (speed) and exposes its greatest weakness (human context). Having the information in written form eliminates these issues. The cost of creating and maintaining these resources should fall with the help of AI.

I’ve written about how organizations already codify themselves as they automate with traditional software. Creating wikis and other written resources is essentially programming in natural language, which is more accessible and compact.

- Move from reviews to standardized pre-approvals and surveillance.

Human organizations often prefer reviews as a checkpoint because creating a list of requirements is time-consuming, and they are commonly wrong. A simple review and release catches obvious problems and limits overhead and upfront investment. Reviews of this style are still relevant for many AI tasks where a human prompts the agent and then reviews the output.

AI could increase velocity for more complex and cross-functional projects by moving away from reviews. Waiting for human review from various teams is slow. Alternatively, AI agents can generate a list of requirements and unit tests for their specialty in a few minutes, considering more organizational context (now written) than humans can. Work that meets the pre-approval standards can continue, and then surveillance paired with graduated rollouts can detect whether there is an unusual number of errors.

Human organizations face a tradeoff between “waterfall” and “agile”; AI organizations can do both at once with minimal penalty, increasing iteration speed.
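As a rough sketch of how pre-approval checks and a graduated rollout might fit together, consider the following. Everything here is an assumption made for illustration: the two checks, the rollout stages, the 2% error limit, and the observed error rates are hypothetical, not values from the article.

```python
def pre_approved(work, checks):
    """Return True only if the work passes every standardized check."""
    return all(check(work) for check in checks)


def graduated_rollout(observed_error_rates,
                      stages=(0.01, 0.10, 0.50, 1.00),
                      max_error_rate=0.02):
    """Widen exposure stage by stage, halting when errors look unusual.

    observed_error_rates[i] is the error rate seen after rolling out to
    stages[i]. Returns (last safe fraction of traffic, status).
    """
    reached = 0.0
    for fraction, error_rate in zip(stages, observed_error_rates):
        if error_rate > max_error_rate:
            # Surveillance flagged this stage: stay at the previous one.
            return reached, "halted"
        reached = fraction
    return reached, "complete"


# Hypothetical pre-approval requirements an agent might generate.
checks = [
    lambda work: work["unit_tests_passed"],
    lambda work: work["lines_changed"] <= 500,
]

work = {"unit_tests_passed": True, "lines_changed": 120}
approved = pre_approved(work, checks)

# Error rates observed at each stage; the third stage breaches the limit.
result = graduated_rollout([0.005, 0.010, 0.040, 0.000]) if approved else (0.0, "rejected")
print(approved, result)
```

The point of the design is that no human sign-off sits on the critical path: work that passes the checks ships to a small audience first, and monitoring decides whether it goes further.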

- Use “Stop Work Authority” methods to ensure quality.

One of the most important components of the Toyota Production System is that every employee has “stop work authority.” Any employee can, and is encouraged to, stop the line if they see an error or confusion. New processes might have many stops as employees work out the kinks, but things quickly line out. It is a very efficient bug-hunting method.

AI agents should have stop work authority. They can be effective in catching errors because they work in probabilities. Work stops when they cross a threshold of uncertainty. Waymo already does this with AI-driven taxis. The cars stop and consult human operators when confused.

An obvious need is a human operations team that can respond to these stoppages in seconds or minutes.

Issues are recorded and can be fixed permanently by adding to written context resources, retraining, altering procedures, or cleaning inputs.
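A minimal sketch of stop work authority for an agent might look like the following. The `StopWorkAgent` class, the 0.8 confidence threshold, and the task names are hypothetical; a real agent would derive its confidence from the model rather than receive it as an argument.

```python
from dataclasses import dataclass, field


@dataclass
class StopWorkAgent:
    # Hypothetical threshold: below this confidence the agent stops the line.
    confidence_threshold: float = 0.8
    # Recorded stoppages, so issues can later be fixed permanently.
    issue_log: list = field(default_factory=list)

    def handle(self, task: str, confidence: float) -> str:
        if confidence < self.confidence_threshold:
            # Too uncertain: stop work, record the issue, ask a human.
            self.issue_log.append({"task": task, "confidence": confidence})
            return "stopped: escalated to human operator"
        return "completed"


agent = StopWorkAgent()
first = agent.handle("approve routine invoice", confidence=0.95)
second = agent.handle("refund with conflicting records", confidence=0.55)
print(first)
print(second)
print(agent.issue_log)
```

The issue log is what makes the stops useful over time: each entry points at a gap in written context, training, procedures, or inputs that can be closed.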

- Design for AI.

A concept called “Design for Manufacturing” is popular with manufacturing nerds and many leading companies. The idea is that some actions are much cheaper and defect-free than others. For instance, an injection molded plastic part with a shape that only allows installation one way will be a fraction of the cost of a CNC-cut metal part with an ambiguous installation orientation. The smart thing to do is design a product to use the plastic part instead of a metal one.

The same will be true of AI agents. Designing processes for their strengths will have immense value, especially in production, where errors are costly.

- Cast a Wider Design Net.

The concept of “Design for AI” also applies at higher levels. Employees with the creativity for clever architectural designs are scarce resources. AI agents can help by providing analysis of many rabbit holes and iterations, helping less creative employees or supercharging the best.

The design phase has the most impact on downstream cost and productivity of any phase.

- Minimize human touch points.

Human interaction significantly slows down any process and kills one of the primary AI advantages.

Written context is the first step in eliminating human touch points. Human workers can supervise the creation of the wikis instead of completing low-level work.

Pre-approvals are the next, so AI agents are not waiting for human sign-off.

AI decision probability thresholds, graduated rollouts, and unit tests can reduce the need for human inspection of work output.

- Eliminate meeting culture.

Meetings help human organizations coordinate tasks and exchange context. Humans will continue to have meetings even in AI organizations.

The vast majority of lower-level meetings need to be cut. They lose their advantages once work completion times are compressed and context more widely available.

Meeting content moves from day-to-day operations to much higher-level questions about strategy and coordination. Humans might spend even more time in meetings if the organizational cadence increases so that strategies have to constantly adjust!

Once an icon of the 20th century seen as obsolete in the 21st, Encyclopaedia Britannica, now known as just Britannica, is all in on artificial intelligence, and may soon go public at a valuation of nearly $1 billion, according to the New York Times.

Until 2012 when printing ended, the company’s books served as the oldest continuously published, English-language encyclopedias in the world, essentially collecting all the world’s knowledge in one place before Google or Wikipedia were a thing. That has helped Britannica pivot into the AI age, where models benefit from access to high-quality, vetted information. More general-purpose models like ChatGPT suffer from hallucinations because they have hoovered up the entire internet, including all the junk and misinformation.

While it still offers an online edition of its encyclopedia, as well as the Merriam-Webster dictionary, Britannica’s biggest business today is selling online education software to schools and libraries, the software it hopes to supercharge with AI. That could mean using AI to customize learning plans for individual students. The idea is that students will enjoy learning more when software can help them understand the gaps in their understanding of a topic and stay on it longer. Another education tech company, Brainly, recently announced that answers from its chatbot will link to the exact learning materials (i.e. textbooks) they reference.

Britannica’s CEO Jorge Cauz also told the Times about the company’s Britannica AI chatbot, which allows users to ask questions about its vast database of encyclopedic knowledge that it collected over two centuries from vetted academics and editors. The company similarly offers chatbot software for customer service use cases.

Britannica told the Times it is expecting revenue to double from two years ago, to $100 million.

A company in the space of selling educational books that has seen its fortunes go the opposite direction is Chegg. The company has seen its stock price plummet almost in lock-step with the rise of OpenAI’s ChatGPT, as students canceled their subscriptions to its online knowledge platform.

A.I. hallucinations are reinvigorating the creative side of science. They speed the process by which scientists and inventors dream up new ideas and test them to see if reality concurs. It’s the scientific method — only supercharged. What once took years can now be done in days, hours and minutes. In some cases, the accelerated cycles of inquiry help scientists open new frontiers.

“We’re exploring,” said James J. Collins, an M.I.T. professor who recently praised hallucinations for speeding his research into novel antibiotics. “We’re asking the models to come up with completely new molecules.”

The A.I. hallucinations arise when scientists teach generative computer models about a particular subject and then let the machines rework that information. The results can range from subtle and wrongheaded to surreal. At times, they lead to major discoveries.

In October, David Baker of the University of Washington shared the Nobel Prize in Chemistry for his pioneering research on proteins — the knotty molecules that empower life. The Nobel committee praised him for discovering how to rapidly build completely new kinds of proteins not found in nature, calling his feat “almost impossible.”

In an interview before the prize announcement, Dr. Baker cited bursts of A.I. imaginings as central to “making proteins from scratch.” The new technology, he added, has helped his lab obtain roughly 100 patents, many for medical care. One is for a new way to treat cancer. Another seeks to aid the global war on viral infections. Dr. Baker has also founded or helped start more than 20 biotech companies.

Despite the allure of A.I. hallucinations for discovery, some scientists find the word itself misleading. They see the imaginings of generative A.I. models not as illusory but prospective — as having some chance of coming true, not unlike the conjectures made in the early stages of the scientific method. They see the term hallucination as inaccurate, and thus avoid using it.

The word also gets frowned on because it can evoke the bad old days of hallucinations from LSD and other psychedelic drugs, which scared off reputable scientists for decades. A final downside is that scientific and medical communications generated by A.I. can, like chatbot replies, get clouded by false information.

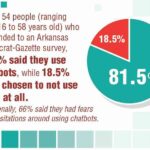

The rise of artificial intelligence (AI) has brought about numerous innovations that have revolutionized industries, from healthcare and education to finance and entertainment. However, alongside the seemingly limitless capabilities of ChatGPT and friends, we find a less-discussed consequence: the gradual decline of human cognitive skills. Unlike earlier tools such as calculators and spreadsheets, which made specific tasks easier without fundamentally altering our ability to think, AI is reshaping the way we process information and make decisions, often diminishing our reliance on our own cognitive abilities.

Tools like calculators and spreadsheets were designed to assist in specific tasks—such as arithmetic and data analysis—without fundamentally altering the way our brains process information. In fact, these tools still require us to understand the basics of the tasks at hand. For example, you need to understand what the formula does, and what output you are seeking, before you type it into Excel. While these tools simplified calculations, they did not erode our ability to think critically or engage in problem-solving – the tools simply made life easier. AI, on the other hand, is more complex in terms of its offerings – and cognitive impact. As AI becomes more prevalent, effectively “thinking” for us, scientists and business leaders are concerned about the larger effects on our cognitive skills.

The effects of AI on cognitive development are already being identified in schools across the United States. In a report titled, “Generative AI Can Harm Learning”, researchers at the University of Pennsylvania found that students who relied on AI for practice problems performed worse on tests compared to students who completed assignments without AI assistance. This suggests that the use of AI in academic settings is not just an issue of convenience, but may be contributing to a decline in critical thinking skills.

Furthermore, educational experts argue that AI’s increasing role in learning environments risks undermining the development of problem-solving abilities. Students are increasingly being taught to accept AI-generated answers without fully understanding the underlying processes or concepts. As AI becomes more ingrained in education, there is a concern that future generations may lack the capacity to engage in deeper intellectual exercises, relying on algorithms instead of their own analytical skills.

Using AI as a tool to augment human abilities, rather than replace them, is the solution. Enabling that solution is a function of collaboration, communication and connection – three things that capitalize on human cognitive abilities.

For leaders and aspiring leaders, we have to create cultures and opportunities for higher-level thinking skills. The key to working more effectively with AI is in first understanding how to work independently of AI, according to the National Institutes of Health. Researchers at Stanford point to the importance of explanations, where AI shares not just outputs but insights into how the ultimate conclusion was reached, described in simple terms that invite further inquiry (and independent thinking).

Whether through collaborative learning, complex problem-solving, or creative thinking exercises, the goal should be to create spaces where human intelligence remains at the center. Does that responsibility fall on learning and development (L&D), or HR, or marketing, sales, engineering… or the executive team? The answer is: yes. A dedication to the human operating system remains vital for even the most technologically-advanced organizations. AI should serve as a complement to, rather than a substitute for, human cognitive skills.

The role of agents will not just be the role of the teacher. Bill Salak observes that “AI agents will take on many responsibilities traditionally handled by human employees, from administrative tasks to more complex, analytical roles. This transition will result in a large-scale redefinition of how humans contribute” to the educational experience. Humans must focus on unique skills—creativity, strategic thinking, emotional intelligence, and adaptability. Roles will increasingly revolve around supervising, collaborating with, or augmenting the capabilities of AI agents.

Jay Patel, SVP & GM of Webex Customer Experience Solutions at Cisco, agrees that AI Agents will be everywhere. They will not just change the classroom experience for students and teachers but profoundly impact all domains. He notes that these AI models, including small language models, are “sophisticated enough to operate on individual devices, enabling users to have highly personalized virtual assistants.” These agents will be more efficient, attuned to individual needs, and, therefore, seemingly more intelligent.

Jay Patel predicts that “the adopted agents will embody the organization’s unique values, personalities, and purpose. This will ensure that the AIs interact in a deeply brand-aligned way.” This will drive a virtuous cycle, as AI agent interactions will not seem like they have been handed off to an untrained intern but rather to someone who knows all and only what they are supposed to know.

For AI agents to realize their full potential, the experience of interacting with them must feel natural. Casual, spoken interaction will be significant, as will the ability of the agent to understand the context in which a question is being asked.

Hassaan Raza, CEO of Tavus, feels that a “human layer” will enable AI agents to realize their full potential as teachers. Agents need to be relatable and able to interact with students in a manner that shows not just subject-domain knowledge but empathy. A robust interface for these agents will include video, allowing students to look the AI in the eye.

In January, thousands of New Hampshire voters picked up their phones to hear what sounded like President Biden telling Democrats not to vote in the state’s primary, just days away.

“We know the value of voting Democratic when our votes count. It’s important you save your vote for the November election,” the voice on the line said.

But it wasn’t Biden. It was a deepfake created with artificial intelligence — and the manifestation of fears that 2024’s global wave of elections would be manipulated with fake pictures, audio and video, due to rapid advances in generative AI technology.

“The nightmare situation was the day before, the day of election, the day after election, some bombshell image, some bombshell video or audio would just set the world on fire,” said Hany Farid, a professor at the University of California at Berkeley who studies manipulated media.

The Biden deepfake turned out to be commissioned by a Democratic political consultant who said he did it to raise alarms about AI. He was fined $6 million by the FCC and indicted on criminal charges in New Hampshire.

But as 2024 rolled on, the feared wave of deceptive, targeted deepfakes didn’t really materialize.

A pro-tech advocacy group has released a new report warning of the growing threat posed by China’s artificial intelligence technology and its open-source approach that could threaten the national and economic security of the United States.

The report, published by American Edge Project, states that “China is rapidly advancing its own open-source ecosystem as an alternative to American technology and using it as a Trojan horse to implant its CCP values into global infrastructure.”

“Their progress is both significant and concerning: Chinese-developed open-source AI tools are already outperforming Western models on key benchmarks, while operating at dramatically lower costs, accelerating global adoption. Through its Belt and Road Initiative (BRI), which spans more than 155 countries on four continents, and its Digital Silk Road (DSR), China is exporting its technology worldwide, fostering increased global dependence, undermining democratic norms, and threatening U.S. leadership and global security.”

A Ukrainian national guard brigade just orchestrated an all-robot combined-arms operation, mixing crawling and flying drones for an assault on Russian positions in Kharkiv Oblast in northern Ukraine.

“We are talking about dozens of units of robotic and unmanned equipment simultaneously on a small section of the front,” a spokesperson for the 13th National Guard Brigade explained.

It was an impressive technological feat—and a worrying sign of weakness on the part of overstretched Ukrainian forces. Unmanned ground vehicles in particular suffer profound limitations, and still can’t fully replace human infantry.

That the 13th National Guard Brigade even needed to replace all of the human beings in a ground assault speaks to how few people the brigade has compared to the Russian units it’s fighting. The 13th National Guard Brigade defends a five-mile stretch of the front line around the town of Hlyboke, just south of the Ukraine-Russia border. It’s holding back a force of no fewer than four Russian regiments.

News

Reviving Engagement in the Spanish Classroom: A Musical Challenge with ChatGPT (Faculty Focus)

News

5 ChatGPT Prompts That Can Help Teens Launch a Startup

Teen entrepreneur using ChatGPT to help with his business

Teen entrepreneurship continues to rise. According to Junior Achievement research, 66% of U.S. teens ages 13 to 17 say they are likely to consider starting a business as adults, and the 2023-2024 Global Entrepreneurship Monitor finds that 24% of 18- to 24-year-olds are currently entrepreneurs. These young founders are not just dreaming; they are building real companies that generate revenue and create social impact, and they are using ChatGPT prompts to help them.

At WIT (Whatever It Takes), the organization I founded in 2009, we have worked with more than 10,000 young entrepreneurs. Over the past year, I have observed a shift in how teens approach business planning. With our guidance, they are using AI tools like ChatGPT not as shortcuts but as strategic thinking partners to clarify ideas, test concepts, and accelerate execution.

The most successful teen entrepreneurs have discovered specific prompts that help them move from one idea to the next. These are not generic brainstorming sessions. They are targeted questions that address the unique challenges young founders face: limited resources, school commitments, and the need to prove their concepts quickly.

Here are five ChatGPT prompts that consistently help teen entrepreneurs build businesses that matter.

1. The Problem-First Discovery ChatGPT Prompt

“I notice that [specific group of people] struggles with [specific problem I’ve observed]. Help me better understand this problem by explaining: 1) why this problem exists, 2) what solutions currently exist and why they are insufficient, 3) how much people might pay to solve this, and 4) three specific ways I could test whether this is a real problem worth solving.”

A teen might use this prompt after noticing that students at school struggle to afford lunch. Instead of assuming they understand the full scope, they could ask ChatGPT to research school lunch debt as a systemic problem. This research might lead them to create a product-based business where the proceeds help pay off lunch debt, combining profit with purpose.

Teens notice problems differently than adults because they experience unique frustrations, from school organization challenges to social media to environmental concerns. According to Square’s research on Gen Z entrepreneurs, 84% plan to be business owners within five years, making them ideal candidates for problem-solving businesses.

2. The Resource Reality Check ChatGPT Prompt

“I am [age] years old with approximately [dollar amount] to invest and [number] hours per week available between school and other commitments. Based on these constraints, what are three business models I could realistically launch this summer? For each option, include startup costs, time requirements, and the first three steps to get started.”

This prompt addresses the elephant in the room: most teen entrepreneurs have limited money and time. When a 16-year-old entrepreneur uses this approach to evaluate a greeting card business concept, they may discover that they can start with $200 and scale gradually. By being realistic about constraints up front, they avoid overcommitting and can build toward sustainable revenue goals.

According to Square’s Gen Z report, 45% of young entrepreneurs use their savings to start businesses, with 80% launching online or with a mobile component. These data support the effectiveness of constraint-based planning: when teens work within realistic limitations, they create more sustainable business models.
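
For readers who want to reuse a prompt like this one with different numbers, the bracketed placeholders can be filled in programmatically. The following is only a minimal sketch: the function name `build_resource_prompt` and the parameter names are hypothetical, the age and dollar amount come from the greeting card example above, and the 10 hours per week is an assumed value for illustration.

```python
# Template mirroring the resource reality check prompt; the placeholder
# names (age, budget, hours) are illustrative assumptions.
RESOURCE_PROMPT = (
    "I am {age} years old with approximately ${budget} to invest and "
    "{hours} hours per week available between school and other commitments. "
    "Based on these constraints, what are three business models I could "
    "realistically launch this summer? For each option, include startup "
    "costs, time requirements, and the first three steps to get started."
)


def build_resource_prompt(age: int, budget: int, hours: int) -> str:
    """Fill the bracketed placeholders with concrete values."""
    if age <= 0 or hours <= 0 or budget < 0:
        raise ValueError("age and hours must be positive; budget cannot be negative")
    return RESOURCE_PROMPT.format(age=age, budget=budget, hours=hours)


if __name__ == "__main__":
    # 16 years old and $200 follow the article's example; 10 hours is assumed.
    print(build_resource_prompt(age=16, budget=200, hours=10))
```

The resulting text can be pasted into ChatGPT as is. Keeping the constraints as explicit parameters makes it easy to rerun the same question as a teen's budget or schedule changes.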

3. The Customer Voice Simulator ChatGPT Prompt

“Act as a [specific demographic] and give me honest feedback on this business idea: [describe your concept]. What would excite you about it? What concerns would you have? How much would you realistically pay? What would need to change for you to become a customer?”

Teen entrepreneurs often struggle with customer research because they cannot easily survey large groups or hire market research firms. This prompt helps simulate customer feedback by having ChatGPT adopt specific personas.

A teen developing a podcast for teen athletes could use this approach by asking ChatGPT to respond as different types of teen athletes. This helps identify content themes that resonate and messaging that feels authentic to the target audience.

The prompt works best when you get specific about demographics, pain points, and contexts. “Act as a high school senior applying to college” produces better insights than “Act as a teenager.”

4. The Minimum Viable Test Designer ChatGPT Prompt

“I want to test this business idea: [describe concept] without spending more than [budget amount] or more than [time commitment]. Design three simple experiments I could run this week to validate customer demand. For each test, explain what I would learn, how to measure success, and what results would indicate that I should move forward.”

This prompt helps teens adopt lean startup methodology without getting lost in business jargon. The focus on “this week” creates urgency and prevents endless planning without action.

A teen who wants to test a clothing line concept could use this prompt to design simple validation experiments, such as posting design mockups on social media to gauge interest, creating a Google Form to collect preorders, and asking friends to share the concept with their networks. These tests cost nothing but provide crucial data about demand and pricing.

5. The Pitch Clarity Generator ChatGPT Prompt

“Turn this business idea into a clear 60-second explanation: [describe your business]. The explanation should include: the problem it solves, your solution, who it helps, why they would choose it over alternatives, and what success looks like. Write it in conversational language a teenager would actually use.”

Clear communication separates successful entrepreneurs from those with good ideas but poor execution. This prompt helps teens distill complex concepts into compelling explanations they can use everywhere, from social media posts to conversations with potential mentors.

The emphasis on “conversational language a teenager would actually use” is important. Many business pitch templates sound artificial when delivered by young founders. Authenticity matters more than corporate jargon.

Beyond ChatGPT Prompts: Implementation Strategy

The difference between teens who use these prompts effectively and those who don’t comes down to follow-through. ChatGPT provides direction, but action creates results.

The most successful young entrepreneurs I work with use these prompts as starting points, not endpoints. They take the AI-generated suggestions and immediately test them in the real world. They call potential customers, create simple prototypes, and iterate based on real feedback.

Recent Junior Achievement research shows that 69% of teens have business ideas but feel uncertain about the starting process, with fear of failure being the top concern for 67% of potential teen entrepreneurs. These prompts address that uncertainty by breaking abstract concepts down into concrete next steps.

The Bigger Picture

Teen entrepreneurs using AI tools like ChatGPT represent a shift in how entrepreneurship education is happening. According to Global Entrepreneurship Monitor research, young entrepreneurs are 1.6 times more likely than adults to want to start a business, and they are particularly active in the technology, food and beverage, fashion, and entertainment sectors. Instead of waiting for formal entrepreneurship classes or MBA programs, these young founders are accessing strategic thinking tools immediately.

This trend aligns with broader shifts in education and the workforce. The World Economic Forum identifies creativity, critical thinking, and resilience as top skills for 2025, capabilities that entrepreneurship naturally develops.

Programs like WIT provide structured support for this journey, but the tools themselves are becoming increasingly accessible. A teen with internet access can now reach business planning resources that were previously available only to established entrepreneurs with significant budgets.

The key is to use these tools thoughtfully. ChatGPT can accelerate thinking and provide frameworks, but it cannot replace the hard work of building relationships, creating products, and serving customers. The best business idea is not the most original one; it is the one that solves a real problem for real people. AI tools can help identify those opportunities, but only action can turn them into businesses that matter.

News

ChatGPT vs. Gemini: I’ve Tested Both, and One Is Definitely Better

Pricing

ChatGPT and Gemini both have free versions that limit your access to features and models. Premium plans for both also start at around $20 per month. Chatbot features, such as deep research, image and video generation, web search, and more, are similar across ChatGPT and Gemini. However, paid Gemini plans also include Google Drive cloud storage (starting at 2TB) and a robust set of integrations across Google Workspace apps.

The highest-end tiers of ChatGPT and Gemini unlock increased usage limits and some unique features, but the prohibitive monthly cost of these plans (such as $200 for ChatGPT Pro or $250 for Gemini AI Ultra) puts them out of reach for most people. The features specific to ChatGPT’s Pro plan, such as the o1 pro mode that leverages additional computing power for particularly complicated questions, are not especially relevant to the average consumer, so you won’t feel like you’re missing out. However, you will likely want the features that are exclusive to Gemini’s AI Ultra plan, such as Veo 3 video generation.

Winner: Gemini

Platforms

You can access ChatGPT and Gemini on the web or via mobile apps (Android and iOS). ChatGPT also has desktop apps (macOS and Windows) and an official extension for Google Chrome. Gemini has no dedicated desktop apps or Chrome extension, although it integrates directly with the browser.

(Credit: OpenAI/PCMag)

ChatGPT is available elsewhere, such as through Siri. As mentioned, you can access Gemini in Google apps, such as Calendar, Docs, Drive, Gmail, Maps, Keep, Photos, Sheets, and YouTube Music. Both ChatGPT’s and Gemini’s models also appear on sites like Perplexity. However, you get the most features from these chatbots in their dedicated apps and web portals.

The interfaces of both chatbots are largely consistent across platforms. They are easy to use and don’t overwhelm you with options and toggles. ChatGPT has a few more settings to play with, such as the ability to adjust its personality, while Gemini’s deep research interface makes better use of screen real estate.

Winner: Tie

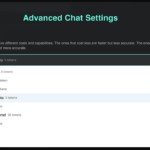

AI Models

ChatGPT has two primary series of models: the 4-series (its conversational flagship line) and the o-series (its complex reasoning line). Gemini similarly offers a general-purpose Flash series and a Pro series for more complicated tasks.

ChatGPT’s latest models are o3 and o4-mini, and Gemini’s latest are 2.5 Flash and 2.5 Pro. Outside of coding or solving an equation, you will spend most of your time using the 4-series and Flash models. Below, you can see how these models perform across a variety of tasks. Which model is better really depends on what you want to do.

Winner: Tie

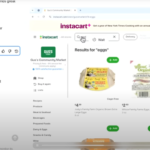

Web Search

ChatGPT and Gemini can both search the web for up-to-date information with ease. However, ChatGPT presents article tiles at the bottom of its answers for further reading, has excellent sourcing that makes it easy to link claims to evidence, includes images in answers when relevant, and often provides more detail in its responses. Gemini doesn’t show source names and full article titles, and it includes article tiles and images only when you use Google’s AI Mode. Sourcing in this mode is even less robust; Google relegates sources to clickable carets that don’t highlight the relevant parts of its answer.

As part of their web search experiences, ChatGPT and Gemini can help you shop. If you ask for shopping advice, both present clickable tiles that link to retailers. However, Gemini generally suggests better products and has a unique feature that lets you upload an image of yourself to digitally try on clothes before you buy.

Winner: ChatGPT

Deep Research

ChatGPT and Gemini can both generate reports that run dozens of pages and include more than 50 sources on any topic. The biggest difference between the two comes down to sourcing. Gemini often cites more sources than ChatGPT, but it handles sourcing in deep research reports the same way it does in AI Mode search, meaning clickable carets with no highlights in the text. Because it is harder to connect the claims in Gemini’s reports to actual sources, it is harder to believe them. ChatGPT’s clear sourcing with in-text highlights is easier to trust. However, Gemini has some quality-of-life advantages over ChatGPT, such as the ability to export properly formatted reports to Google Docs with a single click. Their tone also differs: ChatGPT’s reports read like elaborate forum posts, while Gemini’s reports read like academic papers.

Winner: ChatGPT

Image Generation

ChatGPT’s image generation impresses regardless of what you ask for, even with complex prompts for comic panels or diagrams. It isn’t perfect, but errors and distortion are minimal. Gemini generates visually appealing images faster than ChatGPT, but they routinely include noticeable errors and distortion. With complicated prompts, especially diagrams, Gemini produced nonsensical results in testing.

Above, you can see how ChatGPT (first slide) and Gemini (second slide) fared with the following prompt: “Generate an image of a fashion studio with simple, rustic decor that contrasts with the nicer space. Include a brown couch and brick walls.” ChatGPT’s image limits its problems to fine detail in the leaves of its plants and the text on its book, while Gemini’s image shows more noticeable problems in its cord tube and lamp.

Winner: ChatGPT

Video Generation

Gemini’s video generation is best in class, especially because ChatGPT can’t match its ability to produce accompanying audio. Currently, Google locks Gemini’s latest video generation model, Veo 3, behind the expensive AI Ultra plan, but you get more realistic videos than with ChatGPT. Gemini also has other features that ChatGPT lacks, such as the Flow filmmaking tool, which lets you extend generated clips, and the Whisk AI animator, which lets you animate still images. Keep in mind, however, that even with Veo 3, you still need to generate videos several times to get a great result.

In the example above, I prompted ChatGPT and Gemini to show me a Rubik’s Cube solver solving a cube. The person in Gemini’s video looks great, and the accompanying audio is competent. At the end, there is nice attention to detail with the frame shifting, simulating the stopping of a selfie recording. Meanwhile, ChatGPT struggled with its cube, distorting it heavily.

Winner: Gemini

File Processing

Understanding files is a strength of both ChatGPT and Gemini. Whether you want them to answer questions about a manual, edit a résumé, or tell you something about an image, neither disappoints. However, ChatGPT has the edge over Gemini, as it offers slightly better image recognition and more detailed answers when you ask about uploaded files. Both chatbots still sometimes invent quotes from provided documents or misread images, so be sure to verify their results.

Winner: ChatGPT

Creative Writing

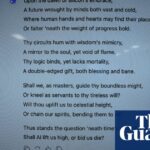

ChatGPT and Gemini can both generate competent poems, plays, stories, and more. ChatGPT, however, stands out between the two because of how unique its responses are and how well it responds to prompts. Gemini’s responses can feel repetitive if you don’t carefully calibrate your requests, and it doesn’t always follow every instruction to the letter.

In the example above, I prompted ChatGPT (first slide) and Gemini (second slide) with the following: “Without referencing anything in your memory or previous responses, I want you to write me a free verse poem. Pay special attention to capitalization, enjambment, line breaks, and punctuation. Since it is free verse, I don’t want a familiar meter or rhyme scheme, but I want it to have a cohesive style.” ChatGPT managed to deliver what I asked for in the prompt, and the result was distinct from previous generations. Gemini had trouble generating a poem that incorporated anything beyond commas and periods, and its poem above reads very similarly to a poem it generated before.

Winner: ChatGPT

Complex Reasoning

ChatGPT’s and Gemini’s complex reasoning models can handle computer science, math, and physics questions with ease, as well as competently show their work. In testing, ChatGPT gave correct answers slightly more often than Gemini, but their performance is quite similar. Both chatbots can and will give you incorrect answers, so verifying their work is still vital if you are doing something important or trying to learn a concept.

Winner: ChatGPT

Integrations

ChatGPT has no meaningful integrations, whereas Gemini’s integrations are a defining feature. Whether you want help editing an essay in Google Docs, to share a Chrome tab to ask a question, to try a new YouTube Music playlist customized to your taste, or to unlock personal insights in Gmail, Gemini can do it all and much more. It is hard to overstate how comprehensive and powerful Gemini’s integrations really are.

Winner: Gemini

AI Assistants

ChatGPT has custom GPTs, and Gemini has Gems. Both are customizable AI assistants. Neither is a big upgrade over talking to the chatbots directly, but third-party custom GPTs add new functionality, such as easy access to Canva for editing generated images. Meanwhile, third parties can’t create Gems, and you can’t share them. You can let custom GPTs access external information or take external actions, but Gems have no similar functionality.

Winner: ChatGPT

Context Windows and Usage Limits

ChatGPT’s context window goes up to 128,000 tokens on its top-tier plans, and all plans have dynamic usage limits based on server load. Gemini, on the other hand, has a 1,000,000-token context window. Google isn’t very clear about Gemini’s exact usage limits, but they are also dynamic depending on server load. Anecdotally, I couldn’t hit the usage limits on ChatGPT’s or Gemini’s paid plans, but it is much easier to do so on the free plans.

Winner: Gemini
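
To make the gap between those two context windows concrete, here is a rough sketch that estimates whether a piece of text would fit in each one. The window sizes are the figures quoted above. The 4-characters-per-token ratio is only a ballpark assumption for English text, not how either chatbot actually tokenizes, and the function names are hypothetical.

```python
# Context window sizes quoted in the comparison above (in tokens).
CONTEXT_WINDOWS = {"ChatGPT": 128_000, "Gemini": 1_000_000}

# Assumption: roughly 4 characters per token for English text. Real
# tokenizers vary by model and language, so treat this as a ballpark.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate from the character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits(text: str) -> dict[str, bool]:
    """Report, per chatbot, whether the estimated tokens fit its window."""
    tokens = estimate_tokens(text)
    return {name: tokens <= limit for name, limit in CONTEXT_WINDOWS.items()}


if __name__ == "__main__":
    document = "word " * 200_000  # 1,000,000 characters of filler text
    print(estimate_tokens(document), fits(document))
```

Under this estimate, a document of about a million characters would overflow the 128,000-token window but sit comfortably inside the 1,000,000-token one, which is the practical difference the comparison is pointing at.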

Privacy

Privacy on ChatGPT and Gemini is a mixed bag. Both collect significant amounts of data, including all your chats, and use that data to train their AI models by default. However, both give you the option to turn training off. Google at least doesn’t collect and use Gemini data for training purposes in Workspace apps, such as Gmail, by default. ChatGPT and Gemini also promise not to sell your data or use it for ad targeting, but Google and OpenAI both have sordid histories when it comes to hacks, leaks, and various digital misdeeds, so I recommend not sharing anything too sensitive.

Winner: Tie
