
Latest Features, Pros, and Cons

Published 1 year ago

eWEEK content and product recommendations are editorially independent. We may make money when you click on links to our partners.

ChatGPT’s Fast Facts

Our product rating: 4/5

Pricing: Free version; $20 monthly for paid plan

Key features:

- Analyzing uploaded images

- Generating high-quality content

- Creating videos with third-party applications

- Generating scripts, articles, and other text

- Automating tasks with ChatGPT-powered applications

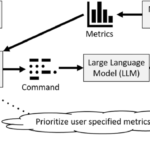

ChatGPT is an advanced conversational AI developed by OpenAI to act as a functional assistant in a range of activities, including answering questions and generating creative content. It uses a large language model (LLM) trained on diverse datasets to engage in sophisticated conversations, provide technical help, and tell stories. Its ability to identify context and nuance helps it stand out from other chatbots, with human-like responses.

ChatGPT’s Pricing

ChatGPT has a free version that allows users to access most of its integrated applications within the platform. Users have full access to GPT-4o mini and limited access to GPT-4. ChatGPT’s paid version costs $20 per month and includes new features plus access to OpenAI o1-preview, OpenAI o1-mini, GPT-4o, GPT-4o mini, and GPT-4; up to five times more messages for GPT‑4o; access to data analysis, file uploads, vision, web browsing, and image generation; and advanced voice mode.

ChatGPT’s Key Features

ChatGPT provides a set of robust features aimed at increasing efficiency and creativity across a variety of jobs. It can create and analyze images, making it an excellent choice for visual projects and data insights. ChatGPT allows you to create detailed plans and strategies, brainstorm ideas, and generate actionable solutions. It can write code for technical tasks, saving developers time, and produce clear, compelling writing for every occasion. ChatGPT can also summarize long texts into shorter, more digestible information.

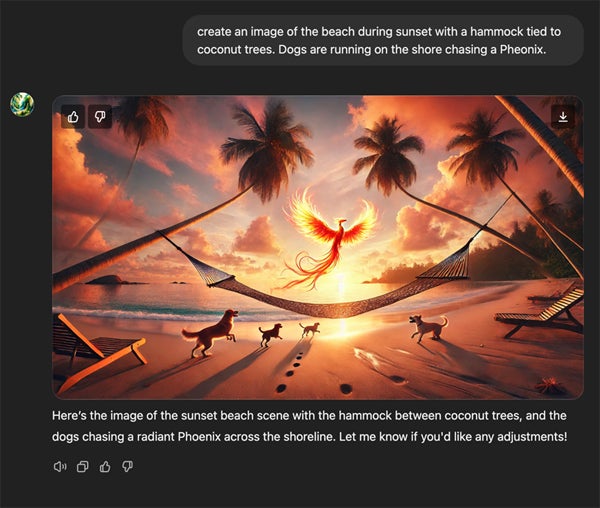

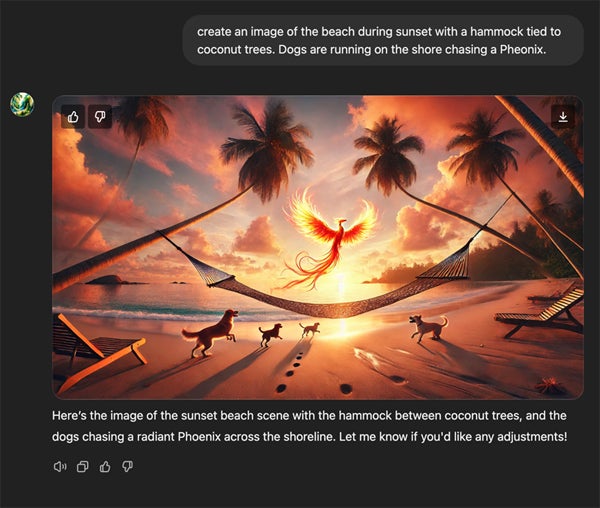

Creating Images

ChatGPT’s generative AI feature lets you create images from text prompts, much like other AI art tools. For the image below, I asked ChatGPT to create an image of a beach during sunset with a hammock tied to coconut trees and dogs chasing a phoenix around the shore. It generated an image almost instantly with visuals close to what I pictured. More specific prompts would likely have refined the image even further.

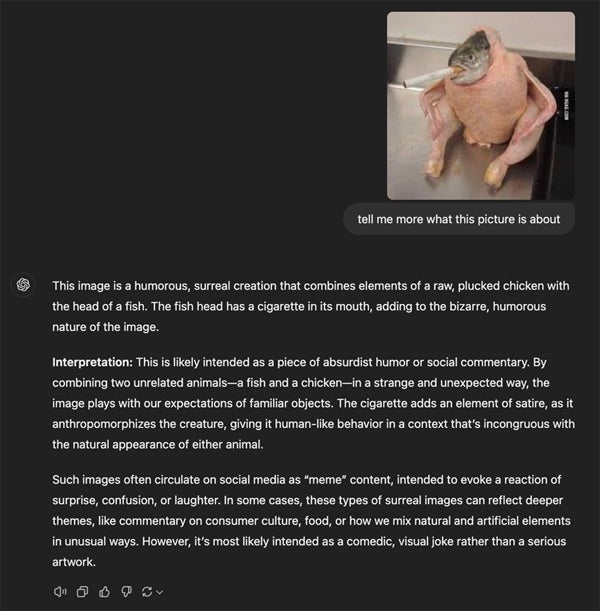

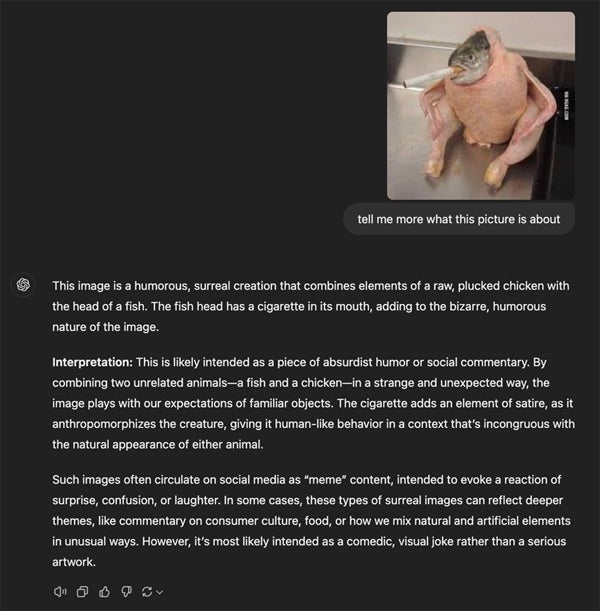

Analyzing Images

To test the tool’s ability to use its multimodal nature to analyze images it did not create, I uploaded an image of a cigarette-smoking fish with a chicken’s body and asked ChatGPT for its interpretation. It described the image as a “humorous, surreal creation… likely intended as a piece of absurdist humor or social commentary” and identified it as possibly a meme.

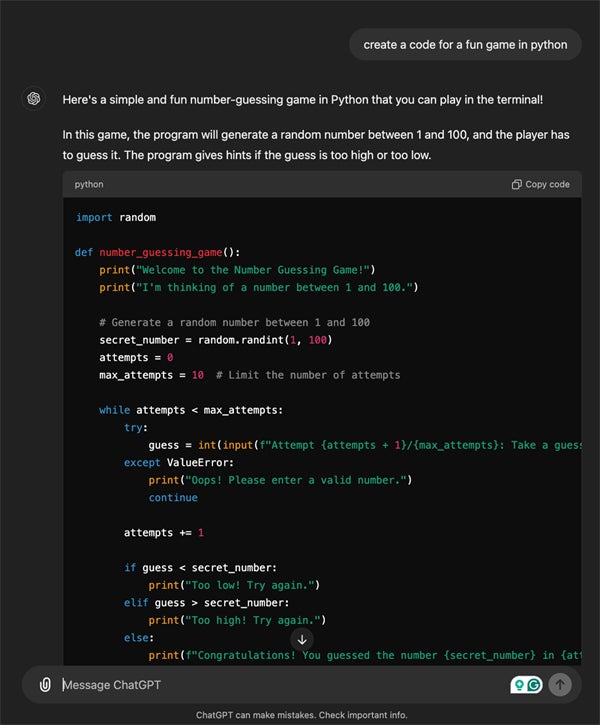

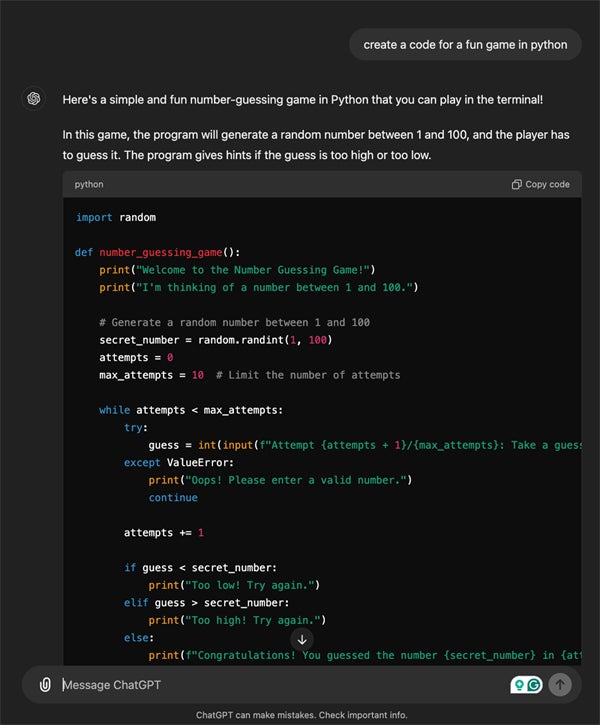

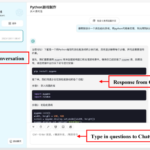

Writing Code for Programming

ChatGPT’s ability to generate programming code is effective but occasionally requires extra work. It frequently generates useful code that can be applied directly to tasks ranging from simple scripts to sophisticated programs, but in some cases the initial result requires extra prompts or revisions to match the specific needs of your application or project. In short, ChatGPT-generated code may function properly out of the box, but it may also need refining or debugging to handle specific edge cases, maximize efficiency, or integrate into a broader codebase. This iterative process can lead to good code but may require some back-and-forth to obtain the desired results.

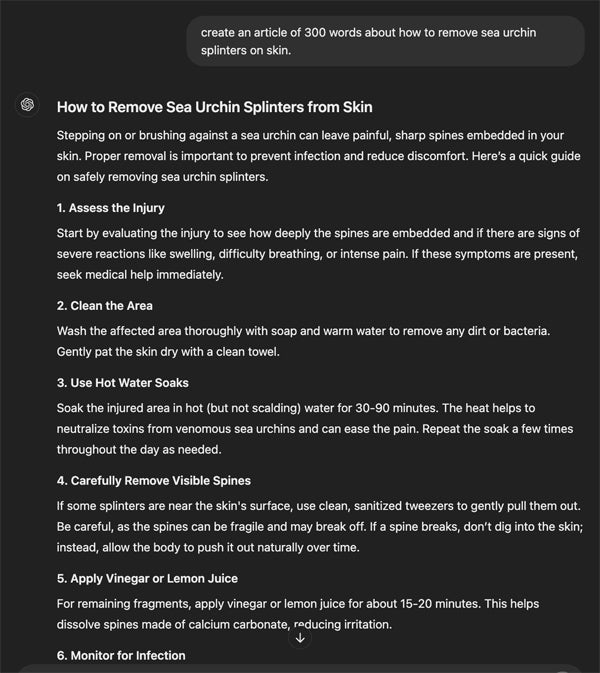

Writing Short and Long-Form Content

Content writing is one of ChatGPT’s specialties. This includes long-form content like articles, book chapters, and case studies or shorter content like social media descriptions, templates, and newsletter items. The clarity of this content will hinge on the accuracy of your prompts. In the example below, I gave a simple prompt with a topic and word count. You’ll want to proofread or fact-check ChatGPT’s work to avoid potential plagiarism, correct inaccuracies, and make the content sound more human in origin.

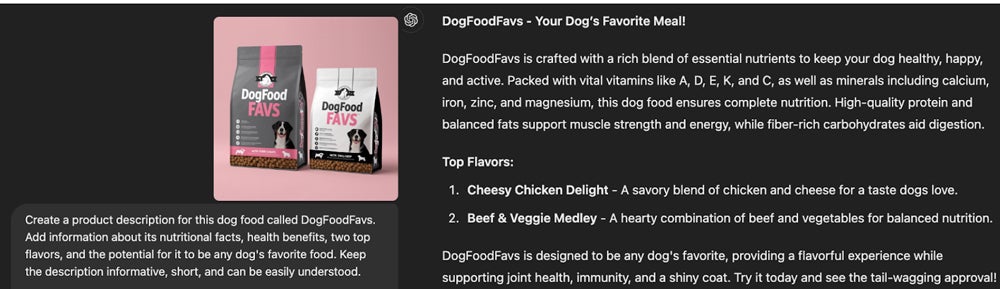

Writing Product Descriptions

ChatGPT’s ability to create product descriptions can be used for a social media campaign or a product page on an e-commerce site. I tested it by uploading a mock image of a dog food brand and asking it to create a description with information about health benefits and flavors. In addition, I prompted it to make the description more informative so that it could be easily understood. ChatGPT’s response turned out far better than I expected. It gave me a good hook, a body that covered the dog food flavors, and a call to action at the end of the description.

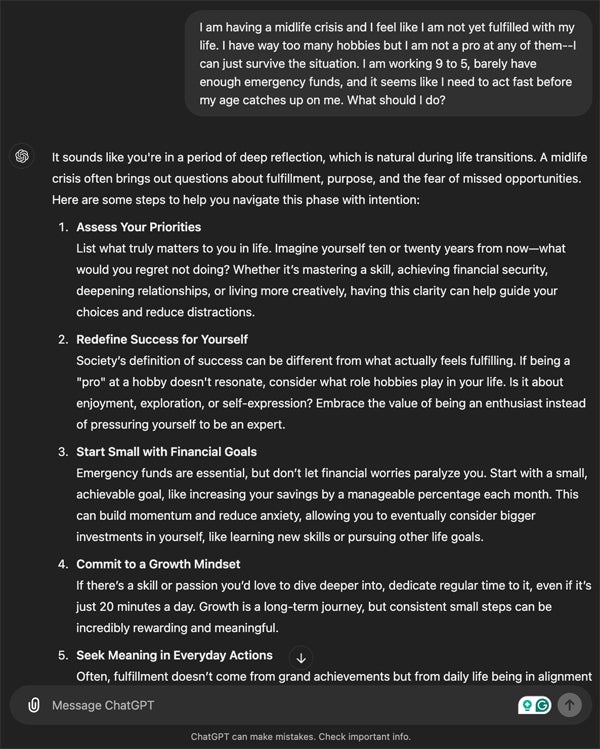

Analyzing Complex Context

ChatGPT can analyze contextual data and make suggestions based on different scenarios, making it useful for various tasks. Its responses are generated using the vast dataset on which it was trained. This dataset comprises a wide range of human language subjects and patterns up to the knowledge cutoff date of October 2023.

ChatGPT uses its basic understanding to identify patterns and replicate conversational context, allowing it to make assumptions about human intention, provide clarifications, and participate in interactive discussions. However, it does not have real-time access to current events or personal user information. As a result, ChatGPT’s generated answers are created using general knowledge rather than real-time changes, making it particularly suited for static information, creative brainstorming, problem-solving, and explaining concepts in depth.

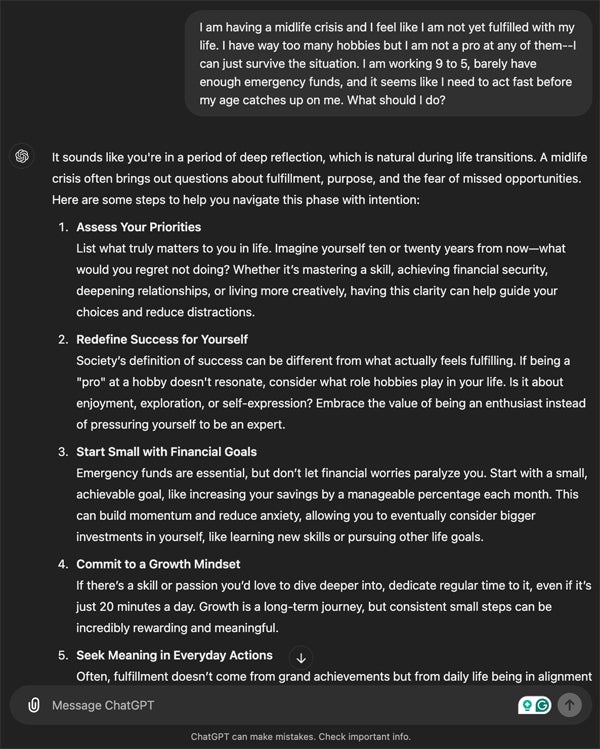

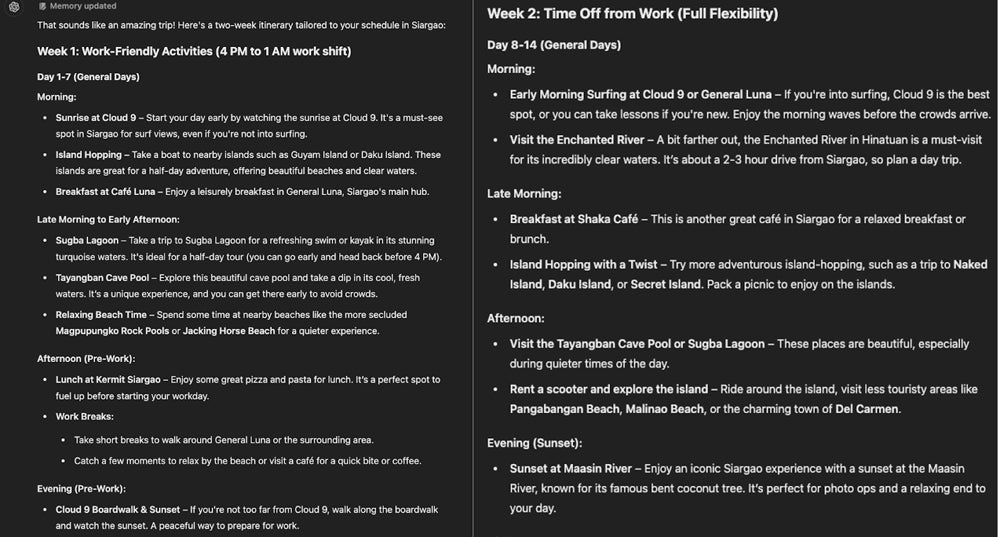

Making Travel Plans

I tested ChatGPT by asking it to plan a trip to Siargao in the Philippines. In one scenario, I will work the first week while on the island; in the second, I have time off work with full flexibility regarding activities. ChatGPT generated itineraries suitable for both scenarios. The places it suggested I visit are all real and still in existence and all the activities are doable and popular with Siargao visitors.

During the work week, ChatGPT suggested famous places for morning or evening visits so that I could still commit to my working hours. ChatGPT organized activities like island hopping, surfing, and exploring scenic places (including the Sugba Lagoon and Secret Island) for the fully flexible week.

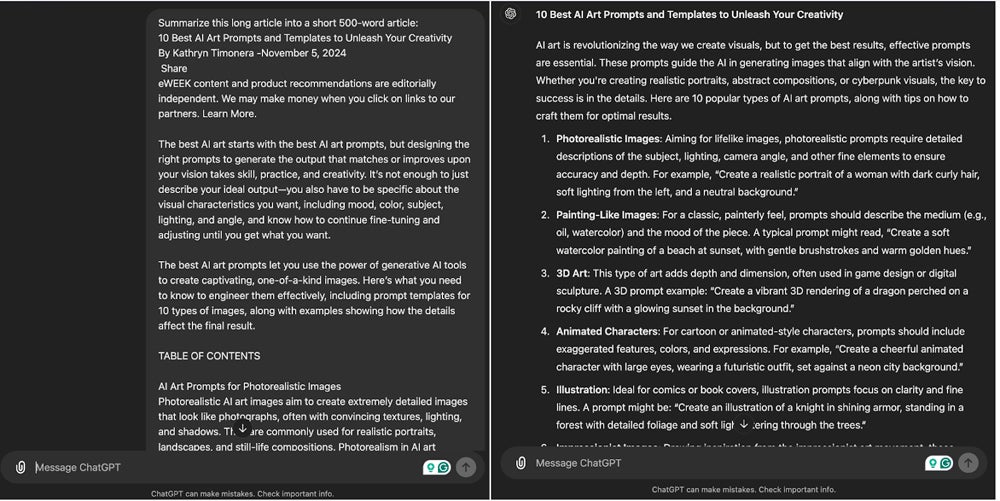

Summarizing Long Texts

In addition to writing articles and descriptions, ChatGPT can help summarize long-form content into shorter, easy-to-read paragraphs. I asked it to summarize eWeek’s 10 Best AI Art Prompts article, and in a few seconds, it narrowed down the prompts and examples and gave me a concise summary. This function saves time and is useful for quickly understanding complex or extensive information. ChatGPT helps readers focus on important takeaways, making it valuable for professionals, students, and creatives who need to quickly absorb knowledge from several sources. It can be applied to various research papers, instructional articles, and creative resources.

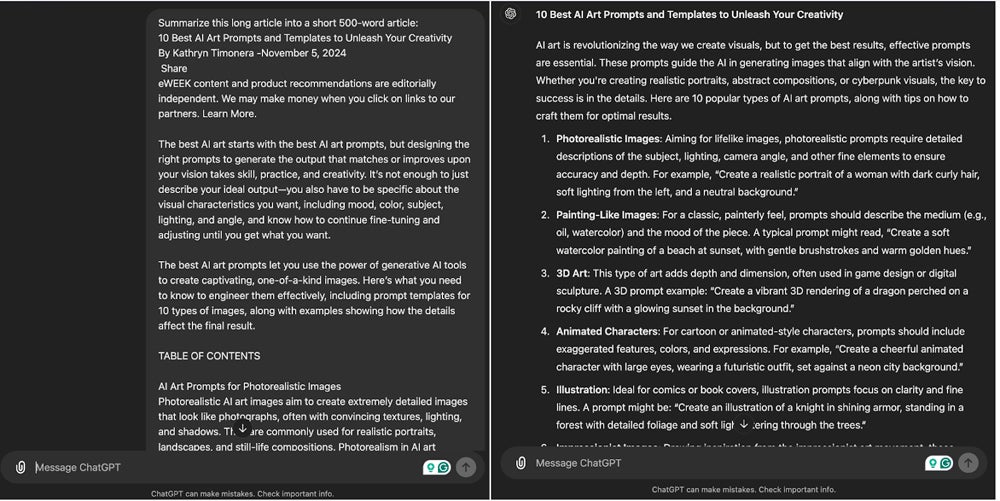

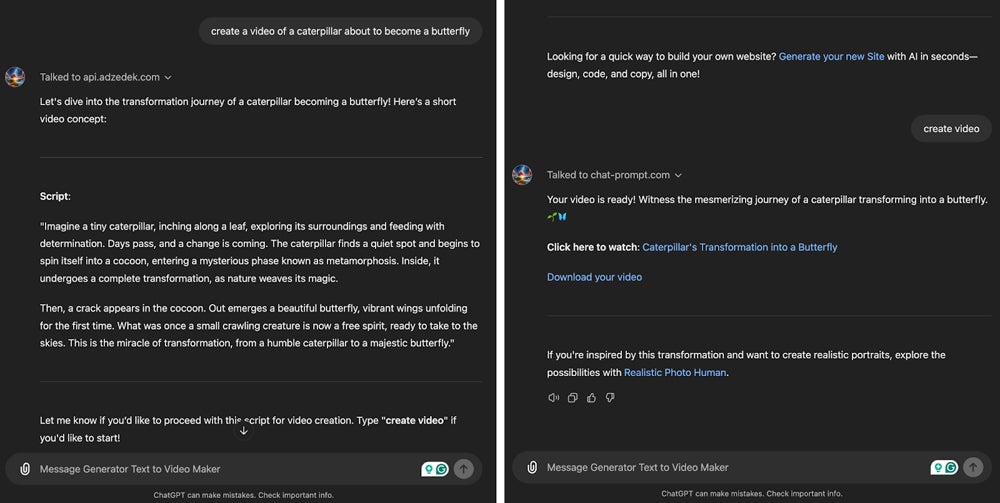

Turning Texts into a Video

ChatGPT’s video production feature is powered by an app from the ChatGPT application store that links smoothly to the api.adzedek.com API. Provide a brief description of your intended video, and the app will create a proposed script and walk you through the video creation process. First, it will direct you to InVideo to see the generated video, which includes high-quality, human-like voice narration over carefully selected stock images and internet footage. This integration provides an excellent, simplified experience for effortlessly making interesting, professional-quality videos.

Automating Tasks Using Internal GPT Applications

ChatGPT now provides Custom GPTs, or customized versions of the platform for specific activities or applications. OpenAI maintains a growing list of various GPTs. Some are available via the ChatGPT app, and others are built by users for specific purposes. These GPTs are intended to assist with common activities like scheduling, note-taking, brainstorming, idea generation, content creation, business and data analysis, programming and development, teaching and tutoring, and creative arts.

Productivity GPTs aid with day-to-day tasks such as scheduling and task management, whereas content creation GPTs assist writers, marketers, and creatives in content generation. Business and data analysis GPTs examine and evaluate statistics, collect information on industry trends, and make recommendations for business choices. Programming and development GPTs assist developers with writing code samples, troubleshooting issues, and creating technical documentation.

Education and Tutoring GPTs facilitate learning and teaching in various topics, including arithmetic, science, history, and language learning. Creative Arts GPTs assist artists, designers, and musicians in pursuing creative interests like design and art concepts, music and lyric composition, and food and meal planning. Users can design their own GPTs by specifying instructions and uploading appropriate documents or data.

ChatGPT Pros and Cons

The table below summarizes this popular tool’s main pros and cons to help you decide whether it’s the best application for your needs.

| Pros | Cons |
|---|---|
| Free version offers an extensive list of extra GPT applications | Free version can’t access real-time information from the internet |
| Generative content abilities can help speed up day-to-day tasks | Generative content may hallucinate from time to time |
| Customizable generative content is possible through its Customize ChatGPT setting | Lacks emotional empathy for complex situations |

Alternatives to ChatGPT

ChatGPT is among the most popular AI chatbots, but it’s not the only one. Other chatbots, including Claude, Perplexity, and Meta AI, have different strengths and weaknesses that may better meet your needs. The table below shows how they compare at a high level, or read on for more detailed information about each application.

| | ChatGPT | Claude | Perplexity | Meta AI |
|---|---|---|---|---|
| Starting price | Free; $20 per month | Free; $20 per month for Pro; $25 per person/month for Team | Free; $20 per month with one month free | Free |
| Real-time access to the internet | Yes (with subscription) | No | Yes | Limited |
| Generate images | Yes | Yes | No | Yes |
| Respond in a human-like tone | Yes | Yes | Yes | Yes |
| Analyze uploaded images | Yes | Yes | No | No |

Claude

Anthropic’s Claude AI is a large language model that focuses on providing safe, reliable conversational AI and natural language understanding. Claude AI is known for its alignment and safety-first strategy, which strives to reduce harmful or biased answers, making it suitable for sensitive applications and use cases requiring ethical AI interactions. One of Claude’s distinguishing qualities is its capacity to manage long conversations. It has a memory that can retain past conversations and details, making interactions smoother and more consistent over time. It also provides configurable user control, allowing users to tailor Claude’s “personality” to meet their requirements and tastes. Claude AI provides both free and paid options, with the Claude Pro plan beginning at roughly $20 per month. Enterprise pricing is available for organizations requiring tailored solutions.

Perplexity

Perplexity is an AI-powered search-and-answer assistant that provides users with fast, conversational responses to complicated questions. Its real-time access to the internet replicates a search engine but with more context-driven responses. Perplexity AI summarizes information from various reliable sources, allowing users to get direct, brief responses without having to go through search engine results. It is useful for research and general questions, integrating search engine features with conversational AI to provide a more user-friendly experience. Perplexity provides free and paid plans, with the premium Perplexity Pro starting at $20 monthly. The Pro subscription includes additional features such as faster response times, priority access to new features, and potentially improved accuracy for professional and heavy users.

Meta AI

Meta AI is Meta’s chatbot platform, which includes a wide range of AI models and tools targeted at enhancing both practical AI applications and basic AI research. Meta AI’s solutions include the open-source LLaMA (Large Language Model Meta AI) focused on natural language processing (NLP) and creation. This is intended to work smoothly across Meta’s ecosystem of platforms, including WhatsApp, Facebook, and Instagram. Meta AI’s multimodal features allow it to handle tasks such as image, video, and text generation, making it adaptable and applicable to a wide range of applications. Meta AI is free to use and can be accessed online through its website or Meta’s popular messaging apps such as Messenger, Instagram DMs, and WhatsApp.

How I Evaluated ChatGPT

I placed the highest weights on features, price, and ease of use, followed by integrations, intelligence, and regulatory compliance.

- Core Features (25 Percent): ChatGPT’s core features should suffice for every user. The general purpose of using an AI chatbot is to create and analyze images and generate outputs that sound personalized to each user. I found the feature set to be strong and deep, offering a wide range of capabilities for many common user applications.

- Price (20 Percent): I looked into ChatGPT’s pricing and whether it offers a free trial or free version of the chatbot. Users are more inclined to use AI if it is free or if the monthly subscription for advanced features is inexpensive.

- Ease of Use (20 Percent): I tested ChatGPT’s web and mobile application versions to see how convenient their navigation is, how quickly they respond to my prompts, and how long their loading time is.

- Integrations (15 Percent): In addition to generating different types of AI content in its own chat window, ChatGPT offers integrated applications within its interface. I tested a few of these GPT-powered applications, each designed for a specific task such as brainstorming, writing, or video and image generation.

- Intelligence (10 Percent): Many users rely on ChatGPT for research, which is why I included intelligence as a category. I tested ChatGPT’s knowledge base by asking questions about recent events. ChatGPT could give only generic answers or information current as of its knowledge cutoff date of October 2023.

- Regulatory Compliance (10 Percent): AI chatbots such as ChatGPT use large amounts of data for model training. This data may contain personal information, and users should be aware of how their data is being used. I found that ChatGPT’s compliance with GDPR is complex and not fully established, although its API complies with the DPA for OpenAI’s APIs.
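
The weighting described in this list can be read as a simple weighted average. The sketch below is illustrative only: the category weights come from the list, but the per-category scores, the 0-5 scale for each category, and the function name `weighted_rating` are assumptions made for the example, not the reviewer’s actual scoring data or method.

```python
# Category weights as stated in the review (percent of the overall rating).
WEIGHTS = {
    "core_features": 25,
    "price": 20,
    "ease_of_use": 20,
    "integrations": 15,
    "intelligence": 10,
    "regulatory_compliance": 10,
}


def weighted_rating(scores: dict[str, float], weights: dict[str, int] = WEIGHTS) -> float:
    """Combine per-category scores into one rating on the same scale."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same categories")
    total_weight = sum(weights.values())
    # Each category contributes its score multiplied by its share of the total weight.
    return sum(scores[name] * weights[name] for name in weights) / total_weight


# Hypothetical per-category scores on a 0-5 scale, invented for illustration only.
example_scores = {
    "core_features": 4.5,
    "price": 4.0,
    "ease_of_use": 4.5,
    "integrations": 4.0,
    "intelligence": 3.5,
    "regulatory_compliance": 3.0,
}

if __name__ == "__main__":
    print(f"Overall rating: {weighted_rating(example_scores):.1f} out of 5")
```

Because the weights sum to 100, each category’s influence on the final number matches its stated percentage, so a weak score in a 10 percent category moves the overall rating much less than a weak score in core features.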

FAQ

ChatGPT is a versatile tool with a wide range of uses in personal, professional, and educational spaces. Users often take advantage of ChatGPT to generate blog entries, articles, social media captions, images, and videos. It can also be used to do general research and build business strategies since it can help brainstorm and simplify complex situations. Many businesses also benefit from ChatGPT since it can write code for a website or application, automate emails, generate customer service chatbots, draft marketing materials, and optimize workflows.

Even though ChatGPT is convenient for general research and content generation, it occasionally hallucinates. Its responses are sometimes inconsistent, making it difficult to validate the accuracy of the information it generates. Social biases in its training data may be reflected in its outputs, potentially causing harm. Users who rely too much on ChatGPT may think less critically and be less likely to double-check facts or apply their own judgment when needed.

Understanding what you need from ChatGPT will allow you to know whether you need to pay for its subscription or take advantage of its free version. ChatGPT is worth subscribing to if you need a faster response time, access to OpenAI’s image generator DALL-E, and access to real-time information on the internet. But if you use ChatGPT for its basic features, the free version of ChatGPT is more than enough to help you with anything that you need.

Bottom Line: ChatGPT Excels at General Generative Content Creation and Research

ChatGPT is a great tool for brainstorming, creating generative content, and carrying out research quickly. It is a useful tool for individuals and professionals and even helps writers because of its fast adaptation to a variety of tasks. Users must approach its outputs thoughtfully, verifying facts and guaranteeing authenticity to avoid potential issues such as inaccuracies or inadvertent plagiarism. The best outcomes are achieved by combining ChatGPT’s automation with human contribution, even while it speeds up workflows and fosters creativity. It can be a transformative tool for addressing intellectual and artistic obstacles when used properly.

To learn more about the artificial intelligence tools for help with day-to-day tasks, read our guide to the best generative AI chatbots.


Reviving Engagement in the Spanish Classroom: A Musical Challenge with ChatGPT – Faculty Focus

Published June 6, 2025

Midway through the semester, it is not uncommon to notice a shift in your students’ energy levels (Baghurst and Kelley, 2013; Kumari et al., 2021). The initial enthusiasm for learning a foreign language can wane as other courses with demanding assignments compete for their attention. Some students prioritize subjects they perceive as more directly tied to their major or career, while others simply feel the weight of mid-semester burnout. In the spring, the long winter months can compound this fatigue, making it even harder to keep students engaged (Rohan and Sigmon, 2000).

This is the moment when a language instructor must pivot, changing the classroom dynamic to rekindle curiosity and motivation. Although instructors strive to incorporate activities that suit the five preferred learning styles (Felder and Henriques, 1995), namely visual (learning through images and spatial understanding), auditory (learning through listening and discussion), reading/writing (learning through text-based interaction), kinesthetic (learning through movement and hands-on activities), and multimodal (a combination of multiple styles), it is beneficial to interrupt structured and, after a while, predictable classes with activities that break the mold. Introducing something unexpected and different from the established classroom dynamic can re-energize students, foster creativity, and boost their enthusiasm for learning.

Music, in particular, has long been an ally of instructors teaching a second language (L2), a language learned after the native tongue, especially since the field transitioned toward a more communicative approach. Rooted in interaction and real-world application, the communicative approach prioritizes meaningful engagement over rote memorization, helping students develop fluency in natural and immersive ways. Research has consistently highlighted the benefits of music in L2 acquisition, from improving pronunciation and listening skills to strengthening vocabulary retention and cultural understanding (DeGrave, 2019; Kumar et al., 2022; Nuessel and Marshall, 2008; Vidal and Nordgren, 2024).

Building on this tradition, the activity shared here not only incorporates music but also integrates artificial intelligence, adding a new layer of engagement and critical thinking. By using AI as a tool in the learning process, students not only become familiar with its capabilities but also develop the ability to critically evaluate the content it generates. This approach encourages them to reflect on language, meaning, and interpretation while engaging in text analysis, creative writing, public speaking, and gamification, all within an interactive and culturally rich framework.

Activity Description: Musical Challenge with ChatGPT: “Sing and Discover” (Desafío musical con ChatGPT: “Canta y descubre”)

Objective:

Students will improve their listening comprehension and written production in Spanish by analyzing and recreating song lyrics with the help of ChatGPT. Although the instructions are presented here in English, the activity should be carried out in the target language, whether that is Spanish or another language being taught.

Instructions:

1. Listen and Decode

- Divide the class into groups of 2-3 students.

- Choose a song in Spanish (for example, La Llorona by Chavela Vargas, Oye Cómo Va by Tito Puente, or Vivir Mi Vida by Marc Anthony).

- Give each group an incomplete version of the lyrics with missing words.

- Students listen to the song and fill in the blanks.

2. Interpret and Discuss

- Within their groups, students analyze the meaning of the song.

- They discuss what they think the lyrics convey, including emotions, themes, and any cultural references they recognize, guided by questions such as:

  - What do you think the song is trying to communicate?

  - What emotions or feelings do the lyrics evoke for you?

  - Can you identify any cultural references in the song? How do they shape its meaning?

  - How does the music (melody, rhythm, etc.) influence your interpretation of the lyrics?

- Each group shares its interpretation with the class.

3. Compare with ChatGPT

- After forming their own analysis, students ask ChatGPT:

  - What do you think the song is trying to communicate?

  - What emotions or feelings do the lyrics evoke for you?

- They compare ChatGPT’s interpretation with their own ideas and discuss similarities and differences.

4. Create Your Own Verse

- Each group writes a new verse that matches the style and rhythm of the song.

- They can ask ChatGPT for help: “Help us write a new verse for this song in the same style.”

5. Perform and Sing

- Each group presents its new verse to the class.

- If they feel comfortable, they can sing it to the original melody.

- It helps if the instructor has a karaoke (instrumental) version of the song available so that the students’ lyrics can be heard clearly.

- Displaying the new lyrics on a monitor or projector lets other students follow and sing along, enhancing the collective experience.

6. Vote – And the Grammy Goes To

Students vote in different categories, including:

- Best adaptation

- Best reflection

- Best performance

- Best attitude

- Best collaboration

7. Final Reflection

- What was the most challenging part of understanding the lyrics?

- How did ChatGPT help you interpret the song?

- What new words or expressions did you learn?

Final Thoughts: Music, AI, and Critical Thinking

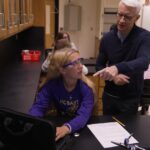

Musical Challenge with ChatGPT: “Sing and Discover” (Desafío musical con ChatGPT: “Canta y descubre”) is an activity I have found especially effective in my intermediate and advanced courses. I use it when students feel overwhelmed or distracted, often around midterms, as a way to help them relax and reconnect with the material. It serves as a refreshing break, allowing students to step away from the stress of assignments and refocus in a fun, interactive way. By incorporating music, creativity, and technology, we keep students present in class, even when everything else seems to demand their attention.

Beyond offering a well-deserved pause, this activity sparks engaging discussions about language interpretation, cultural context, and the role of AI in education. As students compare their own interpretations of the song lyrics with those generated by ChatGPT, they begin to recognize both the value and the limitations of AI. These insights foster critical thinking, helping them develop a more mature approach to technology and its impact on their learning.

Adding the karaoke element enhances the experience further, giving students the chance to perform their new verses and have fun while practicing their language skills. Displaying the lyrics on a screen makes the activity more inclusive, allowing everyone to follow along. Selecting songs that resonate with students’ tastes, whether a classic like La Llorona or a contemporary hit by artists such as Bad Bunny, Selena, Daddy Yankee, or Karol G, makes the activity feel more personal and engaging.

This activity is not limited to the classroom. It is a great addition to Spanish clubs or special events, where students can bond over a shared love of music while practicing their language skills. After all, who doesn’t enjoy a good parody of their favorite song?

By blending language learning with music and technology, Desafío musical con ChatGPT creates a dynamic, interactive environment that re-energizes students and deepens their connection to the language and to the evolving role of AI. It turns moments of burnout into opportunities for creativity, cultural exploration, and renewed enthusiasm for learning.

Angela Rodríguez Mooney, PhD, is an assistant professor of Spanish at Texas Woman’s University.

References

Baghurst, Timothy, and Betty C. Kelley. “An Examination of Stress in College Students over the Course of a Semester.” Health Promotion Practice 15, no. 3 (2014): 438-447.

DeGrave, Pauline. “Music in the Foreign Language Classroom: How and Why?” Journal of Language Teaching and Research 10, no. 3 (2019): 412-420.

Felder, Richard M., and Eunice R. Henriques. “Learning and Teaching Styles in Foreign and Second Language Education.” Foreign Language Annals 28, no. 1 (1995): 21-31.

Nuessel, Frank, and April D. Marshall. “Practices and Principles for Engaging the Three Communicative Modes in Spanish through Songs and Music.” Hispania (2008): 139-146.

Kumar, Tribhuwan, Shamim Akhter, Mehrunnisa M. Yunus, and Atefeh Shamsy. “Use of Music and Songs as Pedagogical Tools in Teaching English as Foreign Language Contexts.” Education Research International 2022, no. 1 (2022): 1-9.


5 ChatGPT Prompts That Can Help Teens Launch a Startup

Published June 5, 2025

Teen entrepreneur using ChatGPT to help with his business

Teen entrepreneurship continues to rise. According to Junior Achievement research, 66% of U.S. teens ages 13 to 17 say they are likely to consider starting a business as adults, and the Global Entrepreneurship Monitor 2023-2024 finds that 24% of 18- to 24-year-olds are currently entrepreneurs. These young founders are not just dreaming; they are building real companies that generate revenue and create social impact, and they are using ChatGPT prompts to help them.

At WIT (Whatever It Takes), the organization I founded in 2009, we have worked with more than 10,000 young entrepreneurs. Over the past year, I have observed a shift in how teens approach business planning. With our guidance, they are using AI tools like ChatGPT not as shortcuts but as strategic thinking partners to clarify ideas, test concepts, and accelerate execution.

The most successful teen entrepreneurs have discovered specific prompts that help them move from one idea to the next. These are not generic brainstorming sessions: they are using targeted questions that address the unique challenges young founders face, including limited resources, school commitments, and the need to prove their concepts quickly.

Here are five ChatGPT prompts that consistently help teen entrepreneurs build businesses that matter.

1. The Problem-First Discovery ChatGPT Prompt

“I notice that [specific group of people] struggle with [specific problem I’ve observed]. Help me better understand this problem by explaining: 1) why this problem exists, 2) what solutions currently exist and why they fall short, 3) how much people might pay to solve this, and 4) three specific ways I could test whether this is a real problem worth solving.”

A teen might use this prompt after noticing that students at school struggle to pay for lunch. Rather than assuming they understand the full scope, they could ask ChatGPT to explore school lunch debt as a systemic problem. That research can lead them to create a product-based business whose proceeds help pay off lunch debt, combining profit with purpose.

Teens notice problems differently than adults because they experience unique frustrations, from school organization challenges to social media to environmental concerns. According to Square’s research on Gen Z entrepreneurs, 84% plan to own a business within five years, making them ideal candidates for problem-solving ventures.

2. The Resource Reality Check ChatGPT Prompt

“I’m [age] years old with approximately [dollar amount] to invest and [number] hours per week available between school and other commitments. Based on these constraints, what are three business models I could realistically launch this summer? For each option, include startup costs, time requirements, and the first three steps to get started.”

This prompt addresses the elephant in the room: most teen entrepreneurs have limited money and time. When a 16-year-old entrepreneur uses this approach to evaluate a greeting card business concept, they may discover that they can start with $200 and scale gradually. By being realistic about constraints up front, they avoid overcommitting and can build toward sustainable revenue goals.

According to Square’s Gen Z report, 45% of young entrepreneurs use their savings to start businesses, with 80% launching online or with a mobile component. These data support the effectiveness of constraint-based planning: when teens work within realistic limits, they create more sustainable business models.

3. The Customer Voice Simulator ChatGPT Prompt

“Act as a [specific demographic] and give me honest feedback on this business idea: [describe your concept]. What would excite you about this? What concerns would you have? How much would you realistically pay? What would need to change for you to become a customer?”

Teen entrepreneurs often struggle with customer research because they cannot easily survey large groups or hire market research firms. This prompt helps simulate customer feedback by having ChatGPT adopt specific personas.

A teen developing a podcast for teen athletes could use this approach by asking ChatGPT to respond as different types of teen athletes. This helps identify content topics that resonate and messaging that feels authentic to the target audience.

The prompt works best when you are specific about demographics, pain points, and contexts. “Act as a high school senior applying to college” yields better insights than “act as a teenager.”

4. The Minimum Viable Test Designer ChatGPT Prompt

“I want to test this business idea: [describe concept] without spending more than [budget amount] or more than [time commitment]. Design three simple experiments I could run this week to validate customer demand. For each test, explain what I would learn, how to measure success, and what results would indicate I should move forward.”

This prompt helps teens adopt the Lean Startup methodology without getting lost in business jargon. The focus on “this week” creates urgency and prevents endless planning without action.

A teen who wants to test a clothing line concept could use this prompt to design simple validation experiments, such as posting design mockups on social media to gauge interest, creating a Google Form to collect preorders, and asking friends to share the concept with their networks. These tests cost nothing but provide crucial data on demand and pricing.

5. The Pitch Clarity Generator ChatGPT Prompt

“Turn this business idea into a clear 60-second explanation: [describe your business]. The explanation should include: the problem it solves, your solution, who it helps, why they would choose it over alternatives, and what success looks like. Write it in conversational language that a teenager would actually use.”

Clear communication separates successful entrepreneurs from those with good ideas but poor execution. This prompt helps teens distill complex concepts into compelling explanations they can use everywhere, from social media posts to conversations with potential mentors.

The emphasis on “conversational language that a teenager would actually use” is important. Many business pitch templates sound artificial when delivered by young founders. Authenticity matters more than corporate jargon.

Beyond the ChatGPT Prompts: Implementation Strategy

The difference between teens who use these prompts effectively and those who don't comes down to follow-through. ChatGPT provides direction, but action creates results.

The most successful young entrepreneurs I work with use these prompts as starting points, not endpoints. They take the AI-generated suggestions and immediately test them in the real world. They call potential customers, build simple prototypes, and iterate based on real feedback.

Recent research from Junior Achievement shows that 69% of teens have business ideas but feel uncertain about how to get started, with fear of failure the top concern for 67% of potential teen entrepreneurs. These prompts address that uncertainty by breaking abstract concepts down into concrete next steps.

The Bigger Picture

Teen entrepreneurs who use AI tools such as ChatGPT represent a shift in how entrepreneurship education happens. According to Global Entrepreneurship Monitor research, young entrepreneurs are 1.6 times more likely than adults to want to start a business, and they are particularly active in the technology, food and beverage, fashion, and entertainment sectors. Instead of waiting for formal entrepreneurship classes or MBA programs, these young founders are accessing strategic thinking tools right away.

This trend aligns with broader shifts in education and the workforce. The World Economic Forum identifies creativity, critical thinking, and resilience as top skills for 2025, all capabilities that entrepreneurship naturally develops.

Programs like WIT provide structured support for this journey, but the tools themselves are becoming increasingly accessible. A teen with internet access can now reach business planning resources that were previously available only to established entrepreneurs with significant budgets.

The key is to use these tools thoughtfully. ChatGPT can accelerate thinking and provide frameworks, but it can't replace the hard work of building relationships, creating products, and serving customers. The best business idea isn't the most original one; it's the one that solves a real problem for real people. AI tools can help identify those opportunities, but only action can turn them into businesses that matter.

ChatGPT vs. Gemini: I've Tested Both, and One Is Definitely Better

Published June 5, 2025

Pricing

ChatGPT and Gemini both have free versions that limit your access to features and models. Premium plans for both also start at around $20 per month. Chatbot features, such as deep research, image and video generation, web search, and more, are similar across ChatGPT and Gemini. However, paid Gemini plans also include Google Drive cloud storage (starting at 2TB) and a robust set of integrations across Google Workspace apps.

The highest-end tiers of ChatGPT and Gemini unlock increased usage limits and some unique features, but the prohibitive monthly cost of these plans (such as $200 for ChatGPT Pro or $250 for Gemini AI Ultra) puts them out of reach for most people. The features specific to ChatGPT's Pro plan, such as the o1 pro mode that uses additional computing power for particularly complicated questions, aren't especially relevant to the average consumer, so you won't feel like you're missing out. However, you will likely want the features that are exclusive to Gemini's AI Ultra plan, such as Veo 3 video generation.

Winner: Gemini

Platforms

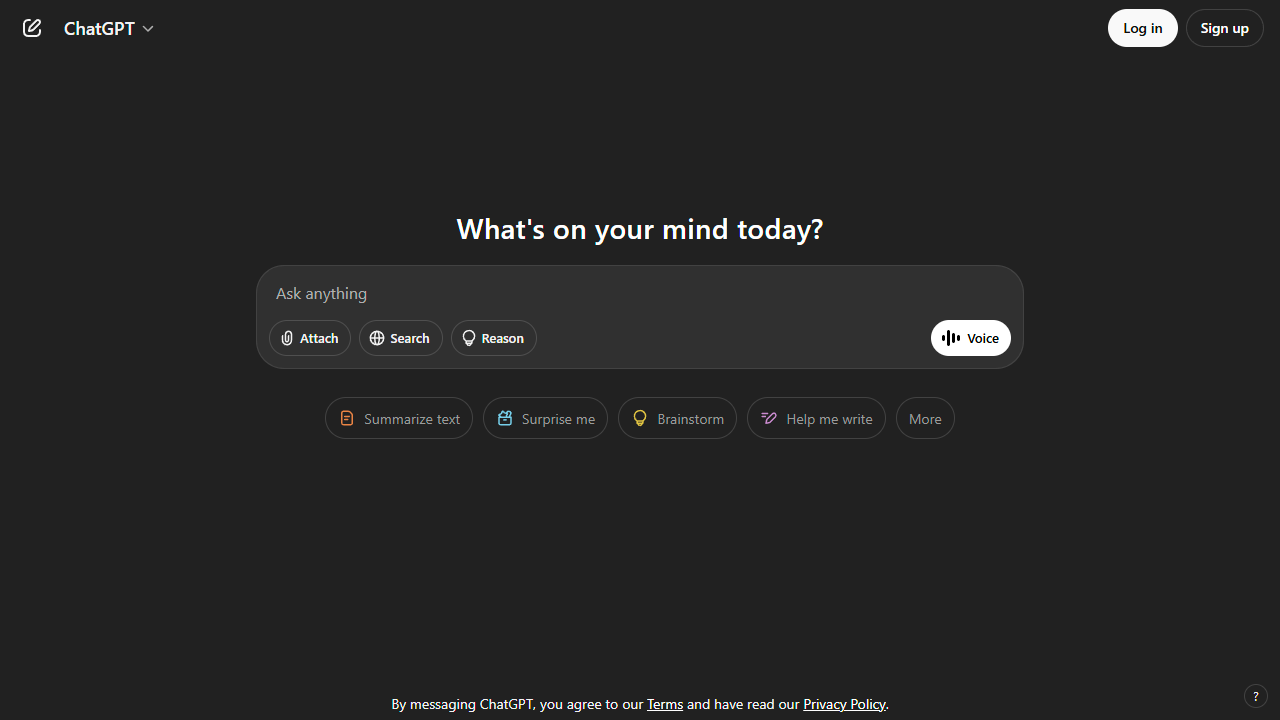

You can access ChatGPT and Gemini on the web or through mobile apps (Android and iOS). ChatGPT also has desktop apps (macOS and Windows) and an official extension for Google Chrome. Gemini doesn't have dedicated desktop apps or a Chrome extension, though it integrates directly with the browser.

(Credit: OpenAI/PCMag)

ChatGPT is available elsewhere, such as through Siri. As mentioned, you can access Gemini in Google apps, such as Calendar, Docs, Drive, Gmail, Maps, Keep, Photos, Sheets, and YouTube Music. Both ChatGPT and Gemini models also appear on sites such as Perplexity. However, you get the most features from these chatbots in their dedicated apps and web portals.

The interfaces of both chatbots are largely consistent across platforms. They're easy to use and don't overwhelm you with options and toggles. ChatGPT has a few more settings to play with, such as the ability to adjust its personality, while Gemini's deep research interface makes better use of screen real estate.

Winner: Tie

AI Models

ChatGPT has two primary series of models: the 4 series (its conversational, flagship line) and the o series (its complex reasoning line). Gemini similarly offers a general-purpose Flash series and a Pro series for more complicated tasks.

ChatGPT's latest models are o3 and o4-mini, and Gemini's latest are 2.5 Flash and 2.5 Pro. Outside of coding or solving an equation, you'll spend most of your time using the 4-series and Flash models. Below, you can see how these models perform across a variety of tasks. Which model is better really depends on what you want to do.

Winner: Tie

Web Search

ChatGPT and Gemini can both search the web for up-to-date information with ease. However, ChatGPT presents article tiles at the bottom of its answers for further reading, has excellent sourcing that makes it easy to link claims to evidence, includes images in answers when relevant, and often provides more detail in its responses. Gemini doesn't show source names and full article titles, and it includes article tiles and images only when you use Google's AI Mode. Sourcing in this mode is even less robust; Google relegates sources to clickable carets that don't highlight the relevant parts of its answer.

As part of their web search experiences, ChatGPT and Gemini can help you shop. If you ask for shopping advice, both present clickable tiles that link to retailers. However, Gemini generally suggests better products and has a unique feature that lets you upload an image of yourself to digitally try on clothing before you buy.

Winner: ChatGPT

Deep Research

ChatGPT and Gemini can generate reports that run dozens of pages and include more than 50 sources on any topic. The biggest difference between the two comes down to sourcing. Gemini often cites more sources than ChatGPT, but it handles sourcing in deep research reports the same way it does in AI Mode search, meaning clickable carets without in-text highlights. Because it's harder to connect the claims in Gemini's reports to actual sources, it's harder to believe them. ChatGPT's clear sourcing with in-text highlights is easier to trust. However, Gemini has some quality-of-life features that ChatGPT lacks, such as the ability to export properly formatted reports to Google Docs with a single click. Their tone also differs: ChatGPT's reports read like elaborate forum posts, while Gemini's reports read like academic papers.

Winner: ChatGPT

Image Generation

ChatGPT's image generation impresses regardless of what you ask for, even complex prompts for comic panels or diagrams. It isn't perfect, but errors and distortion are minimal. Gemini generates visually appealing images faster than ChatGPT, but they routinely include noticeable errors and distortion. With complicated prompts, especially diagrams, Gemini produced nonsensical results in testing.

Above, you can see how ChatGPT (first slide) and Gemini (second slide) fared with the following prompt: “Generate an image of a fashion studio with simple, rustic decor that contrasts with the nicer space. Include a brown sofa and brick walls.” ChatGPT's image limits its problems to the fine detail in the leaves of its plants and the text in its book, while Gemini's image shows more noticeable problems in its cord tube and lamp.

Winner: ChatGPT

Video Generation

Gemini's video generation is best in class, especially since ChatGPT can't match its ability to produce accompanying audio. Google currently locks Gemini's latest video generation model, Veo 3, behind the expensive AI Ultra plan, but you get more realistic videos than you do with ChatGPT. Gemini also has other features that ChatGPT doesn't, such as the Flow filmmaker tool, which lets you extend generated clips, and the Whisk AI animator, which lets you animate still images. Keep in mind, however, that even with Veo 3, you still need to generate videos several times to get a great result.

In the example above, I prompted ChatGPT and Gemini to show me a Rubik's Cube solver solving a cube. The person in Gemini's video looks great, and the accompanying audio is competent. At the end, there's nice attention to detail as the frame shifts, simulating the end of a selfie recording. Meanwhile, ChatGPT struggled with its cube, distorting it heavily.

Winner: Gemini

File Processing

Understanding files is a strength of both ChatGPT and Gemini. Whether you want them to answer questions about a manual, edit a resume, or tell you something about an image, neither disappoints. However, ChatGPT has the edge over Gemini, since it offers slightly better image recognition and more detailed answers when you ask about uploaded files. Both chatbots still sometimes invent quotes from provided documents or misinterpret images, so be sure to verify their results.

Winner: ChatGPT

Creative Writing

ChatGPT and Gemini can both generate competent poems, plays, stories, and more. ChatGPT, however, stands out between the two because of how unique its responses are and how well it responds to prompts. Gemini's responses can feel repetitive if you don't carefully calibrate your requests, and it doesn't always follow every instruction to the letter.

In the example above, I prompted ChatGPT (first slide) and Gemini (second slide) with the following: “Without referencing anything in your memory or previous responses, I want you to write me a free verse poem. Pay special attention to capitalization, enjambment, line breaks, and punctuation. Since it's free verse, I don't want a familiar meter or rhyme scheme, but I want it to have a cohesive style.” ChatGPT managed to deliver what I asked for in the prompt, and its poem was distinct from previous generations. Gemini had trouble generating a poem that incorporated any punctuation beyond commas and periods, and its poem above reads very similarly to one it generated before.

Winner: ChatGPT

Complex Reasoning

The complex reasoning models of ChatGPT and Gemini can handle computer science, math, and physics questions with ease, and they competently show their work. In testing, ChatGPT gave correct answers slightly more often than Gemini, but their performance is quite similar. Both chatbots can and will give you wrong answers, so checking their work is still vital if you're doing something important or trying to learn a concept.

Winner: ChatGPT

Integrations

ChatGPT doesn't have meaningful integrations, whereas Gemini's integrations are a defining feature. Whether you want help editing an essay in Google Docs, want to share a Chrome tab to ask a question, want to try a new YouTube Music playlist personalized to your taste, or want to unlock personal insights in Gmail, Gemini can do it all and much more. It's hard to overstate how comprehensive and powerful Gemini's integrations really are.

Winner: Gemini

AI Assistants

ChatGPT has custom GPTs, and Gemini has Gems. Both are customizable AI assistants. Neither is a huge upgrade over talking directly with the chatbots, but third-party custom GPTs add new functionality, such as easy access to Canva for editing generated images. Meanwhile, third parties can't create Gems, and you can't share them. You can allow custom GPTs to access external information or take external actions, but Gems have no similar functionality.

Winner: ChatGPT

Context Windows and Usage Limits

ChatGPT's context window goes up to 128,000 tokens on its top-tier plans, and all plans have dynamic usage limits based on server load. Gemini, on the other hand, has a 1,000,000-token context window. Google isn't very clear about the exact usage limits for Gemini, but they are also dynamic and depend on server load. Anecdotally, I wasn't able to hit usage limits on the paid plans of either ChatGPT or Gemini, but it's much easier to do so on the free plans.

Winner: Gemini
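
To put the two context window figures in perspective, here is a rough sketch that estimates whether a document would fit in each one. The window sizes are the ones quoted above. The four-characters-per-token ratio is an assumption (a common rule of thumb for English text), and the function names are hypothetical; actual tokenizers vary by model and language, so treat the result as a ballpark only.

```python
# Context window sizes quoted in the article, in tokens.
CONTEXT_WINDOWS = {"ChatGPT": 128_000, "Gemini": 1_000_000}

# ASSUMPTION: about 4 characters per token for English text.
# Real tokenizers differ by model, so this is only an estimate.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits(text: str, chatbot: str) -> bool:
    """Return True if the estimated token count fits the chatbot's window."""
    return estimate_tokens(text) <= CONTEXT_WINDOWS[chatbot]


if __name__ == "__main__":
    # A stand-in document of 750,000 characters (hypothetical).
    document = "word " * 150_000
    for name in CONTEXT_WINDOWS:
        print(f"{name}: fits = {fits(document, name)}")
```

Under these assumptions, the stand-in document comes to roughly 187,500 tokens, which exceeds the 128,000-token window but sits comfortably inside the 1,000,000-token one.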

Privacy

Privacy on ChatGPT and Gemini is a mixed bag. Both collect significant amounts of data, including all of your chats, and use that data to train their AI models by default. However, both give you the option to turn off training. Google at least doesn't collect and use Gemini data for training purposes in Workspace apps, such as Gmail, by default. ChatGPT and Gemini also promise not to sell your data or use it for ad targeting, but Google and OpenAI both have sordid histories when it comes to hacks, leaks, and various digital misdeeds, so I recommend not sharing anything too sensitive.

Winner: Tie
