OpenAI CEO’s Plans for ChatGPT, Fusion and Trump

Photo illustration by Danielle Del Plato for Bloomberg Businessweek; Background illustration: Chuck Anderson/Krea, Photo: Bloomberg

Businessweek | The Big Take

An interview with the OpenAI co-founder.

On Nov. 30, 2022, traffic to OpenAI’s website peaked at a number a little north of zero. It was a startup so small and sleepy that the owners didn’t bother tracking their web traffic. It was a quiet day, the last the company would ever know. Within two months, OpenAI was being pounded by more than 100 million visitors trying, and freaking out about, ChatGPT. Nothing has been the same for anyone since, particularly Sam Altman. In his most wide-ranging interview as chief executive officer, Altman explains his infamous four-day firing, how he actually runs OpenAI, his plans for the Trump-Musk presidency and his relentless pursuit of artificial general intelligence—the still-theoretical next phase of AI, in which machines will be capable of performing any intellectual task a human can do. Edited for clarity and length.

Your team suggested this would be a good moment to review the past two years, reflect on some events and decisions, to clarify a few things. But before we do that, can you tell the story of OpenAI’s founding dinner again? Because it seems like the historic value of that event increases by the day.

Everyone wants a neat story where there’s one moment when a thing happened. Conservatively, I would say there were 20 founding dinners that year [2015], and then one ends up being entered into the canon, and everyone talks about that. The most important one to me personally was Ilya 1 and I at the Counter in Mountain View [California]. Just the two of us.

1 Ilya Sutskever is an OpenAI co-founder and one of the leading researchers in the field of artificial intelligence. As a board member he participated in Altman’s November 2023 firing, only to express public regret over his decision a few days later. He departed OpenAI in May 2024.

And to rewind even back from that, I was always really interested in AI. I had studied it as an undergrad. I got distracted for a while, and then 2012 comes along. Ilya and others do AlexNet. 2 I keep watching the progress, and I’m like, “Man, deep learning seems real. Also, it seems like it scales. That’s a big, big deal. Someone should do something.”

2 AlexNet, created by Alex Krizhevsky, Sutskever and Geoffrey Hinton, used a deep convolutional neural network (CNN), a type of computer program well suited to images, trained on graphics processors to recognize pictures far more accurately than earlier systems, kick-starting major progress in AI.

So I started meeting a bunch of people, asking who would be good to do this with. It’s impossible to overstate how nonmainstream AGI was in 2014. People were afraid to talk to me, because I was saying I wanted to start an AGI effort. It was, like, cancelable. It could ruin your career. But a lot of people said there’s one person you really gotta talk to, and that was Ilya. So I stalked Ilya at a conference, got him in the hallway, and we talked. I was like, “This is a smart guy.” I kind of told him what I was thinking, and we agreed we’d meet up for a dinner. At our first dinner, he articulated—not in the same words he’d use now—but basically articulated our strategy for how to build AGI.

What from the spirit of that dinner remains in the company today?

Kind of all of it. There’s additional things on top of it, but this idea that we believed in deep learning, we believed in a particular technical approach to get there and a way to do research and engineering together—it’s incredible to me how well that’s worked. Usually when you have these ideas, they don’t quite work, and there were clearly some things about our original conception that didn’t work at all. Structure. 3 All of that. But [believing] AGI was possible, that this was the approach to bet on, and if it were possible it would be a big deal to society? That’s been remarkably true.

3 OpenAI was founded in 2015 as a nonprofit with the mission to ensure that AGI benefits all of humanity. This would become, er, problematic. We’ll get to it.

One of the strengths of that original OpenAI group was recruiting. Somehow you managed to corner the market on a ton of the top AI research talent, often with much less money to offer than your competitors. What was the pitch?

The pitch was just come build AGI. And the reason it worked—I cannot overstate how heretical it was at the time to say we’re gonna build AGI. So you filter out 99% of the world, and you only get the really talented, original thinkers. And that’s really powerful. If you’re doing the same thing everybody else is doing, if you’re building, like, the 10,000th photo-sharing app? Really hard to recruit talent. Convince me no one else is doing it, and appeal to a small, really talented set? You can get them all. And they all wanna work together. So we had what at the time sounded like an audacious or maybe outlandish pitch, and it pushed away all of the senior experts in the field, and we got the ragtag, young, talented people who are good to start with.

How quickly did you guys settle into roles?

Most people were working on it full time. I had a job, 4 so at the beginning I was doing very little, and then over time I fell more and more in love with it. And then, by 2018, I had drunk the Kool-Aid. But it was like a Band of Brothers approach for a while. Ilya and Greg 5 were kind of running it, but everybody was doing their thing.

4 In 2014, Altman became the CEO of Y Combinator, the startup accelerator that helped launch Airbnb, Dropbox and Stripe, among others.

5 Greg Brockman is a co-founder of OpenAI and its current president.

It seems like you’ve got a romantic view of those first couple of years.

Well, those are the most fun times of OpenAI history for sure. I mean, it’s fun now, too, but to have been in the room for what I think will turn out to be one of the greatest periods of scientific discovery, relative to the impact it has on the world, of all time? That’s a once-in-a-lifetime experience. If you’re very lucky. If you’re extremely lucky.

In 2019 you took over as CEO. How did that come about?

I was trying to do OpenAI and [Y Combinator] at the same time, which was really hard. I just got transfixed by this idea that we were actually going to build AGI. Funnily enough, I remember thinking to myself back then that we would do it in 2025, but it was a totally random number based off of 10 years from when we started. People used to joke in those days that the only thing I would do was walk into a meeting and say, “Scale it up!” Which is not true, but that was kind of the thrust of that time period.

The official release date of ChatGPT is Nov. 30, 2022. Does that feel like a million years ago or a week ago?

[Laughs] I turn 40 next year. On my 30th birthday, I wrote this blog post, and the title of it was “The days are long but the decades are short.” Somebody this morning emailed me and said, “This is my favorite blog post, I read it every year. When you turn 40, will you write an update?” I’m laughing because I’m definitely not gonna write an update. I have no time. But if I did, the title would be “The days are long, and the decades are also f—ing very long.” So it has felt like a very long time.

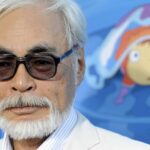

OpenAI senior executives at the company’s headquarters in San Francisco on March 13, 2023, from left: Sam Altman, chief executive officer; Mira Murati, chief technology officer; Greg Brockman, president; and Ilya Sutskever, chief scientist. Photographer: Jim Wilson/The New York Times

As that first cascade of users started showing up, and it was clear this was going to be a colossal thing, did you have a “holy s—” moment?

So, OK, a couple of things. No. 1, I thought it was gonna do pretty well! The rest of the company was like, “Why are you making us launch this? It’s a bad decision. It’s not ready.” I don’t make a lot of “we’re gonna do this thing” decisions, but this was one of them.

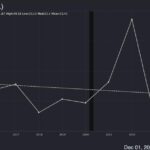

YC has this famous graph that PG 6 used to draw, where you have the squiggles of potential, and then the wearing off of novelty, and then this long dip, and then the squiggles of real product market fit. And then eventually it takes off. It’s a piece of YC lore. In the first few days, as [ChatGPT] was doing its thing, it’d be more usage during the day and less at night. The team was like, “Ha ha ha, it’s falling off.” But I had learned one thing during YC, which is, if every time there’s a new trough it’s above the previous peak, there’s something very different going on. It looked like that in the first five days, and I was like, “I think we have something on our hands that we do not appreciate here.”

6 Paul Graham, the co-founder of Y Combinator and a philosopher king-type on the subject of startups and technology.
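The YC rule of thumb Altman describes can be stated as a simple check. What follows is a minimal sketch, assuming usage is summarized as one daytime peak and one overnight trough per day. It reads "previous peak" as the prior day's high, because a trough is always lower than the peak immediately before it, so the comparison has to reach back one cycle. The function name and the numbers are hypothetical and are not OpenAI's data or tooling.

```python
from typing import Sequence


def troughs_above_previous_peaks(daily_peaks: Sequence[float],
                                 nightly_troughs: Sequence[float]) -> bool:
    """Return True if each night's low beats the previous day's high.

    daily_peaks[i] is the highest usage on day i; nightly_troughs[i] is the
    lowest usage in the night that follows day i. Day 0 has no earlier peak,
    so the comparison starts at day 1.
    """
    if len(daily_peaks) != len(nightly_troughs):
        raise ValueError("need one trough per peak")
    if len(daily_peaks) < 2:
        return False  # not enough cycles to see a pattern
    return all(
        nightly_troughs[i] > daily_peaks[i - 1]
        for i in range(1, len(daily_peaks))
    )


# Hypothetical usage numbers (arbitrary units), five day/night cycles.
breakout_peaks = [100, 180, 300, 520, 900]
breakout_troughs = [60, 130, 220, 400, 700]

fading_peaks = [100, 90, 85, 80, 78]
fading_troughs = [60, 55, 50, 48, 45]

print(troughs_above_previous_peaks(breakout_peaks, breakout_troughs))  # True
print(troughs_above_previous_peaks(fading_peaks, fading_troughs))      # False
```

In the first series the daily swing is still visible, but each overnight low clears the previous day's high, which is the "something very different" pattern; in the second, usage falls off as novelty fades.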

And that started off a mad scramble to get a lot of compute 7—which we did not have at the time—because we had launched this with no business model or thoughts for a business model. I remember a meeting that December where I sort of said, “I’ll consider any idea for how we’re going to pay for this, but we can’t go on.” And there were some truly horrible ideas—and no good ones. So we just said, “Fine, we’re just gonna try a subscription, and we’ll figure it out later.” That just stuck. We launched with GPT-3.5, and we knew we had GPT-4 [coming], so we knew that it was going to be better. And as I started talking to people who were using it about the things they were using it for, I was like, “I know we can make these better, too.” We kept improving it pretty rapidly, and that led to this global media consciousness [moment], whatever you want to call it.

7 In AI, “compute” is commonly used as a noun, referring to the processing power and resources—such as central processing units (CPUs), graphics processing units (GPUs) and tensor processing units (TPUs)—required to train, run or develop machine-learning models. Want to know how Nvidia Corp.’s Jensen Huang got rich? Compute.

Are you a person who enjoys success? Were you able to take it in, or were you already worried about the next phase of scaling?

A very strange thing about me, or my career: The normal arc is you run a big, successful company, and then in your 50s or 60s you get tired of working that hard, and you become a [venture capitalist]. It’s very unusual to have been a VC first and have had a pretty long VC career and then run a company. And there are all these ways in which I think it’s bad, but one way in which it has been very good for me is you have the weird benefit of knowing what’s gonna happen to you, because you’ve watched and advised a bunch of other people through it. And I knew I was both overwhelmed with gratitude and, like, “F—, I’m gonna get strapped to a rocket ship, and my life is gonna be totally different and not that fun.” I had a lot of gallows humor about it. My husband 8 tells funny stories from that period of how I would come home, and he’d be like, “This is so great!” And I was like, “This is just really bad. It’s bad for you, too. You just don’t realize it yet, but it’s really bad.” [Laughs]

8 Altman married longtime partner Oliver Mulherin, an Australian software engineer, in early 2024. They’re expecting a child in March 2025.

You’ve been Silicon Valley famous for a long time, but one consequence of GPT’s arrival is that you became world famous with the kind of speed that’s usually associated with, like, Sabrina Carpenter or Timothée Chalamet. Did that complicate your ability to manage a workforce?

It complicated my ability to live my life. But in the company, you can be a well-known CEO or not, people are just like, “Where’s my f—ing GPUs?”

I feel that distance in all the rest of my life, and it’s a really strange thing. I feel that when I’m with old friends, new friends—anyone but the people very closest to me. I guess I do feel it at work if I’m with people I don’t normally interact with. If I have to go to one meeting with a group that I almost never meet with, I can kind of tell it’s there. But I spend most of my time with the researchers, and man, I promise you, come with me to the research meeting right after this, and you will see nothing but disrespect. Which is great.

Do you remember the first moment you had an inkling that a for-profit company with billions in outside investment reporting up to a nonprofit board might be a problem?

There must have been a lot of moments. But that year was such an insane blur, from November of 2022 to November of 2023, I barely remember it. It literally felt like we built out an entire company from almost scratch in 12 months, and we did it in crazy public. One of my learnings, looking back, is everybody says they’re not going to screw up the relative ranking of important versus urgent, 9 and everybody gets tricked by urgent. So I would say the first moment when I was coldly staring at reality in the face—that this was not going to work—was about 12:05 p.m. on whatever that Friday afternoon was. 10

9 Dwight Eisenhower apparently said “What is important is seldom urgent, and what is urgent is seldom important” so often that it gave birth to the Eisenhower Matrix, a time management tool that splits tasks into four quadrants:

- Urgent and important: Tasks to be done immediately.

- Important but not urgent: Tasks to be scheduled for later.

- Urgent but not important: Tasks to be delegated.

- Not urgent and not important: Tasks to be eliminated.

Understanding the wisdom of the Eisenhower Matrix—then ignoring it when things get hectic—is a startup tradition.
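The four quadrants amount to two yes/no questions mapped to an action. A minimal sketch, assuming each task has already been judged urgent or not and important or not; the function name and sample tasks are illustrative only.

```python
def eisenhower_action(urgent: bool, important: bool) -> str:
    """Map a task's urgency and importance to its quadrant's recommended action."""
    if urgent and important:
        return "do immediately"
    if important:          # important but not urgent
        return "schedule for later"
    if urgent:             # urgent but not important
        return "delegate"
    return "eliminate"     # neither urgent nor important


# Hypothetical tasks as (urgent, important) pairs.
tasks = {
    "fix outage": (True, True),
    "plan research roadmap": (False, True),
    "answer routine vendor email": (True, False),
    "reorganize old slides": (False, False),
}
for name, (urgent, important) in tasks.items():
    print(f"{name}: {eisenhower_action(urgent, important)}")
```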

10 On Nov. 17, 2023, at approximately noon California time, OpenAI’s board informed Altman of his immediate removal as CEO. He was notified of his firing roughly 5 to 10 minutes before the public announcement, during a Google Meet session, while he was watching the Las Vegas Grand Prix.

When the news emerged that the board had fired you as CEO, it was shocking. But you seem like a person with a strong EQ. Did you detect any signs of tension before that? And did you know that you were the tension?

I don’t think I’m a person with a strong EQ at all, but even for me this was over the line of where I could detect that there was tension. You know, we kind of had this ongoing thing about safety versus capability and the role of a board and how to balance all this stuff. So I knew there was tension, and I’m not a high-EQ person, so there’s probably even more.

A lot of annoying things happened that first weekend. My memory of the time—and I may get the details wrong—so they fired me at noon on a Friday. A bunch of other people quit Friday night. By late Friday night I was like, “We’re just going to go start a new AGI effort.” Later Friday night, some of the executive team was like, “Um, we think we might get this undone. Chill out, just wait.”

Saturday morning, two of the board members called and wanted to talk about me coming back. I was initially just supermad and said no. And then I was like, “OK, fine.” I really care about [OpenAI]. But I was like, “Only if the whole board quits.” I wish I had taken a different tack than that, but at the time it felt like a just thing to ask for. Then we really disagreed over the board for a while. We were trying to negotiate a new board. They had some ideas I thought were ridiculous. I had some ideas they thought were ridiculous. But I thought we were [generally] agreeing. And then—when I got the most mad in the whole period—it went on all day Sunday. Saturday into Sunday they kept saying, “It’s almost done. We’re just waiting for legal advice, but board consents are being drafted.” I kept saying, “I’m keeping the company together. You have all the power. Are you sure you’re telling me the truth here?” “Yeah, you’re coming back. You’re coming back.”

And then Sunday night they shock-announce that Emmett Shear was the new CEO. And I was like, “All right, now I’m f—ing really done,” because that was real deception. Monday morning rolls around, all these people threaten to quit, and then they’re like, “OK, we need to reverse course here.”

OpenAI’s San Francisco offices on March 10, 2023. Photographer: Jim Wilson/The New York Times

The board says there was an internal investigation that concluded you weren’t “consistently candid” in your communications with them. That’s a statement that’s specific—they think you were lying or withholding some information—but also vague, because it doesn’t say what specifically you weren’t being candid about. Do you now know what they were referring to?

I’ve heard different versions. There was this whole thing of, like, “Sam didn’t even tell the board that he was gonna launch ChatGPT.” And I have a different memory and interpretation of that. But what is true is I definitely was not like, “We’re gonna launch this thing that is gonna be a huge deal.” And I think there’s been an unfair characterization of a number of things like that. The one thing I’m more aware of is, I had had issues with various board members on what I viewed as conflicts or otherwise problematic behavior, and they were not happy with the way that I tried to get them off the board. Lesson learned on that.

You recognized at some point that the structure of [OpenAI] was going to smother the company, that it might kill it in the crib. Because a mission-driven nonprofit could never compete for the computing power or make the rapid pivots necessary for OpenAI to thrive. The board was made up of originalists who put purity over survival. So you started making decisions to set up OpenAI to compete, which required being a little sneaky, which the board—

I don’t think I was doing things that were sneaky. I think the most I would say is, in the spirit of moving really fast, the board did not understand the full picture. There was something that came up about “Sam owning the startup fund, and he didn’t tell us about this.” And what happened there is because we have this complicated structure: OpenAI itself could not own it, nor could someone who owned equity in OpenAI. And I happened to be the person who didn’t own equity in OpenAI. So I was temporarily the owner or GP 11 of it until we got a structure set up to transfer it. I have a different opinion about whether the board should have known about that or not. But should there be extra clarity to communicate things like that, where there’s even the appearance of doing stuff? Yeah, I’ll take that feedback. But that’s not sneaky. It’s a crazy year, right? It’s a company that’s moving a million miles an hour in a lot of different ways. I would encourage you to talk to any current board member 12 and ask if they feel like I’ve ever done anything sneaky, because I make it a point not to do that.

11 General partner. According to a Securities and Exchange Commission filing on March 29, 2024, the new general partner of OpenAI’s startup fund is Ian Hathaway. The fund has roughly $175 million available to invest in AI-focused startups.

12 OpenAI’s current board is made up of Altman and:

I think the previous board was genuine in their level of conviction and concern about AGI going wrong. There’s a thing that one of those board members said to the team here during that weekend that people kind of make fun of her for, 13 which is it could be consistent with the mission of the nonprofit board to destroy the company. And I view that—that’s what courage of convictions actually looks like. I think she meant that genuinely. And although I totally disagree with all specific conclusions and actions, I respect conviction like that, and I think the old board was acting out of misplaced but genuine conviction in what they believed was right. And maybe also that, like, AGI was right around the corner and we weren’t being responsible with it. So I can hold respect for that while totally disagreeing with the details of everything else.

13 Former OpenAI board member Helen Toner is reported to have said there are circumstances in which destroying the company “would actually be consistent with the mission” of the board. Altman had previously confronted Toner—the director of strategy at Georgetown University’s Center for Security and Emerging Technology—about a paper she wrote criticizing OpenAI for releasing ChatGPT too quickly. She also complimented one of its competitors, Anthropic, for not “stoking the flames of AI hype” by waiting to release its chatbot.

You obviously won, because you’re sitting here. But just practicing a bit of empathy, were you not traumatized by all of this?

I totally was. The hardest part of it was not going through it, because you can do a lot on a four-day adrenaline rush. And it was very heartwarming to see the company and kind of my broader community support me. But then very quickly it was over, and I had a complete mess on my hands. And it got worse every day. It was like another government investigation, another old board member leaking fake news to the press. And all those people that I feel like really f—ed me and f—ed the company were gone, and now I had to clean up their mess. It was about this time of year [December], actually, so it gets dark at like 4:45 p.m., and it’s cold and rainy, and I would be walking through my house alone at night just, like, f—ing depressed and tired. And it felt so unfair. It was just a crazy thing to have to go through and then have no time to recover, because the house was on fire.

When you got back to the company, were you self-conscious about big decisions or announcements because you worried about how your character may be perceived? Actually, let me put that more simply. Did you feel like some people may think you were bad, and you needed to convince them that you’re good?

It was worse than that. Once everything was cleared up, it was all fine, but in the first few days no one knew anything. And so I’d be walking down the hall, and [people] would avert their eyes. It was like I had a terminal cancer diagnosis. There was sympathy, empathy, but [no one] was sure what to say. That was really tough. But I was like, “We got a complicated job to do. I’m gonna keep doing this.”

Can you describe how you actually run the company? How do you spend your days? Like, do you talk to individual engineers? Do you get walking-around time?

Let me just call up my calendar. So we do a three-hour executive team meeting on Mondays, and then, OK, yesterday and today, six one-on-ones with engineers. I’m going to the research meeting right after this. Tomorrow is a day where there’s a couple of big partnership meetings and a lot of compute meetings. There’s five meetings on building up compute. I have three product brainstorm meetings tomorrow, and I’ve got a big dinner with a major hardware partner after. That’s kind of what it looks like. A few things that are weekly rhythms, and then it’s mostly whatever comes up.

How much time do you spend communicating, internally and externally?

Way more internal. I’m not a big inspirational email writer, but lots of one-on-one, small-group meetings and then a lot of stuff over Slack.

Oh, man. God bless you. You get into the muck?

I’m a big Slack user. You can get a lot of data in the muck. I mean, there’s nothing that’s as good as being in a meeting with a small research team for depth. But for breadth, man, you can get a lot that way.

You’ve previously discussed stepping in with a very strong point of view about how ChatGPT should look and what the user experience should be. Are there places where you feel your competency requires you to be more of a player than a coach?

At this scale? Not really. I had dinner with the Sora 14 team last night, and I had pages of written, fairly detailed suggestions of things. But that’s unusual. Or the meeting after this, I have a very specific pitch to the research team of what I think they should do over the next three months and quite a lot of granular detail, but that’s also unusual.

14 Sora is OpenAI’s AI video generator, released to the public on Dec. 9, 2024.

We’ve talked a little about how scientific research can sometimes be in conflict with a corporate structure. You’ve put research in a different building from the rest of the company, a couple of miles away. Is there some symbolic intent behind that?

Uh, no, that’s just logistical, space planning. We will get to a big campus all at once at some point. Research will still have its own area. Protecting the core of research is really critical to what we do.

The normal way a Silicon Valley company goes is you start up as a product company. You get really good at that. You build up to this massive scale. And as you build up this massive scale, revenue growth naturally slows down as a percentage, usually. And at some point the CEO gets the idea that he or she is going to start a research lab to come up with a bunch of new ideas and drive further growth. And that has worked a couple of times in history. Famously for Bell Labs and Xerox PARC. Usually it doesn’t. Usually you get a very good product company and a very bad research lab. We’re very fortunate that the little product company we bolted on is the fastest-growing tech company maybe ever—certainly in a long time. But that could easily subsume the magic of research, and I do not intend to let that happen.

We are here to build AGI and superintelligence and all the things that come beyond that. There are many wonderful things that are going to happen to us along the way, any of which could very reasonably distract us from the grand prize. I think it’s really important not to get distracted.

As a company, you’ve sort of stopped publicly speaking about AGI. You started talking about AI and levels, and yet individually you talk about AGI.

I think “AGI” has become a very sloppy term. If you look at our levels, our five levels, you can find people that would call each of those AGI, right? And the hope of the levels is to have some more specific grounding on where we are and kind of like how progress is going, rather than is it AGI, or is it not AGI?

What’s the threshold where you’re going to say, “OK, we’ve achieved AGI now”?

The very rough way I try to think about it is when an AI system can do what very skilled humans in important jobs can do—I’d call that AGI. There’s then a bunch of follow-on questions like, well, is it the full job or only part of it? Can it start as a computer program and decide it wants to become a doctor? Can it do what the best people in the field can do or the 98th percentile? How autonomous is it? I don’t have deep, precise answers there yet, but if you could hire an AI as a remote employee to be a great software engineer, I think a lot of people would say, “OK, that’s AGI-ish.”

Now we’re going to move the goalposts, always, which is why this is hard, but I’ll stick with that as an answer. And then when I think about superintelligence, the key thing to me is, can this system rapidly increase the rate of scientific discovery that happens on planet Earth?

You now have more than 300 million users. What are you learning from their behavior that’s changed your understanding of ChatGPT?

Talking to people about what they use ChatGPT for, and what they don’t, has been very informative in our product planning. A thing that used to come up all the time is it was clear people were trying to use ChatGPT for search a lot, and that actually wasn’t something that we had in mind when we first launched it. And it was terrible for that. But that became very clearly an important thing to build. And honestly, since we’ve launched search in ChatGPT, I almost don’t use Google anymore. And I don’t think it would have been obvious to me that ChatGPT was going to replace my use of Google before we launched it, when we just had an internal prototype.

Another thing we learned from users: how much people are relying on it for medical advice. Many people who work at OpenAI get really heartwarming emails when people are like, “I was sick for years, no doctor told me what I had. I finally put all my symptoms and test results into ChatGPT—it said I had this rare disease. I went to a doctor, and they gave me this thing, and I’m totally cured.” That’s an extreme example, but things like that happen a lot, and that has taught us that people want this and we should build more of it.

Your products have had a lot of prices, from $0 to $20 to $200—Bloomberg reported on the possibility of a $2,000 tier. How do you price technology that’s never existed before? Is it market research? A finger in the wind?

We launched ChatGPT for free, and then people started using it a lot, and we had to have some way to pay for it. I believe we tested two prices, $20 and $42. People thought $42 was a little too much. They were happy to pay $20. We picked $20. Probably it was late December of 2022 or early January. It was not a rigorous “hire someone and do a pricing study” thing.

There’s other directions that we think about. A lot of customers are telling us they want usage-based pricing. You know, “Some months I might need to spend $1,000 on compute, some months I want to spend very little.” I am old enough that I remember when we had dial-up internet, and AOL gave you 10 hours a month or five hours a month or whatever your package was. And I hated that. I hated being on the clock, so I don’t want that kind of a vibe. But there’s other ones I can imagine that still make sense, that are somehow usage-based.

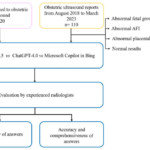

What does your safety committee look like now? How has it changed in the past year or 18 months?

One thing that’s a little confusing—also to us internally—is we have many different safety things. So we have an internal-only safety advisory group [SAG] that does technical studies of systems and presents a view. We have an SSC [safety and security committee], which is part of the board. We have the DSB 15 with Microsoft. And so you have an internal thing, a board thing and a Microsoft joint board. We are trying to figure out how to streamline that.

15 The Deployment Safety Board, with members from OpenAI and Microsoft, approves any model deployment over a certain capability threshold.

And are you on all three?

That’s a good question. So the SAG sends their reports to me, but I don’t think I’m actually formally on it. But the procedure is: They make one, they send it to me. I sort of say, “OK, I agree with this” or not, send it to the board. The SSC, I am not on. The DSB, I am on. Now that we have a better picture of what our safety process looks like, I expect to find a way to streamline that.

Has your sense of what the dangers actually might be evolved?

I still have roughly the same short-, medium- and long-term risk profiles. I still expect that on cybersecurity and bio stuff, 16 we’ll see serious, or potentially serious, short-term issues that need mitigation. Long term, as you think about a system that really just has incredible capability, there’s risks that are probably hard to precisely imagine and model. But I can simultaneously think that these risks are real and also believe that the only way to appropriately address them is to ship product and learn.

16 In September 2024, OpenAI acknowledged that its latest AI models have increased the risk of misuse in creating bioweapons. In May 2023, Altman joined hundreds of other signatories to a statement highlighting the existential risks posed by AI.

When it comes to the immediate future, the industry seems to have coalesced around three potential roadblocks to progress: scaling the models, chip scarcity and energy scarcity. I know they commingle, but can you rank those in terms of your concern?

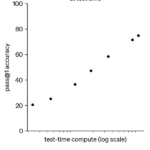

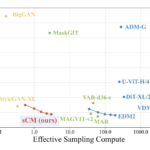

We have a plan that I feel pretty good about on each category. Scaling the models, we continue to make technical progress, capability progress, safety progress, all together. I think 2025 will be an incredible year. Do you know this thing called the ARC-AGI challenge? Five years ago this group put together this prize as a North Star toward AGI. They wanted to make a benchmark that they thought would be really hard. The model we’re announcing on Friday 17 passed this benchmark. For five years it sat there, unsolved. It consists of problems like this. 18 They said if you can score 85% on this, we’re going to consider that a “pass.” And our system—with no custom work, just out of the box—got an 87.5%. 19 And we have very promising research and better models to come.

17 OpenAI introduced its o3 model on Dec. 20. It should be available to users in early 2025. The previous model was o1; The Information reported that OpenAI skipped over o2 to avoid a potential conflict with the British telecommunications provider O2.

18 On my laptop, Altman called up the ARC-AGI website, which displayed a series of bewildering abstract grids. The abstraction is the point; to “solve” the grids and achieve AGI, an AI model must rely more on reason than its training data.

19 According to ARC-AGI: “OpenAI’s new o3 system—trained on the ARC-AGI-1 Public Training set—has scored a breakthrough 75.7% on the Semi-Private Evaluation set at our stated public leaderboard $10k compute limit. A high-compute (172x) o3 configuration scored 87.5%.”

We have been hard at work on the whole [chip] supply chain, all the partners. We have people to build data centers and make chips for us. We have our own chip effort here. We have a wonderful partnership with Nvidia, just an absolutely incredible company. And we’ll talk more about this next year, but now is the time for us to scale chips.

Nvidia Corp. CEO Jensen Huang speaking at an event in Tokyo on Nov. 13, 2024. Photographer: Kyodo/AP Images

Fusion is going to work. Um. On what time frame?

Soon. Well, soon there will be a demonstration of net-gain fusion. You then have to build a system that doesn’t break. You have to scale it up. You have to figure out how to build a factory—build a lot of them—and you have to get regulatory approval. And that will take, you know, years altogether? But I would expect [Helion 20] will show you that fusion works soon.

In the short term, is there any way to sustain AI’s growth without going backward on climate goals?

Yes, but none that is as good, in my opinion, as quickly permitting fusion reactors. I think our particular kind of fusion is such a beautiful approach that we should just race toward that and be done.

A lot of what you just said interacts with the government. We have a new president coming. You made a personal $1 million donation to the inaugural fund. Why?

He’s the president of the United States. I support any president.

I understand why it makes sense for OpenAI to be seen supporting a president who’s famous for keeping score of who’s supporting him, but this was a personal donation. Donald Trump opposes many of the things you’ve previously supported. Am I wrong to think the donation is less an act of patriotic conviction and more an act of fealty?

I don’t support everything that Trump does or says or thinks. I don’t support everything that Biden says or does or thinks. But I do support the United States of America, and I will work to the degree I’m able to with any president for the good of the country. And particularly for the good of what I think is this huge moment that has got to transcend any political issues. I think AGI will probably get developed during this president’s term, and getting that right seems really important. Supporting the inauguration, I think that’s a relatively small thing. I don’t view that as a big decision either way. But I do think we all should wish for the president’s success.

He’s said he hates the Chips Act. You supported the Chips Act.

I actually don’t. I think the Chips Act was better than doing nothing but not the thing that we should have done. And I think there’s a real opportunity to do something much better as a follow-on. I don’t think the Chips Act has been as effective as any of us hoped.

Trump and Musk talk ringside during the UFC 309 event at Madison Square Garden in New York on Nov. 16. Photographer: Chris Unger/Zuffa LLC

Elon 21 is clearly going to be playing some role in this administration. He’s suing you. He’s competing with you. I saw your comments at DealBook that you think he’s above using his position to engage in any funny business as it relates to AI.

21 C’mon, how many Elons do you know?

But if I may: In the past few years he bought Twitter, then sued to get out of buying Twitter. He replatformed Alex Jones. He challenged Zuckerberg to a cage match. That’s just kind of the tip of the funny-business iceberg. So do you really believe that he’s going to—

Oh, I think he’ll do all sorts of bad s—. I think he’ll continue to sue us and drop lawsuits and make new lawsuits and whatever else. He hasn’t challenged me to a cage match yet, but I don’t think he was that serious about it with Zuck, either, it turned out. As you pointed out, he says a lot of things, starts them, undoes them, gets sued, sues, gets in fights with the government, gets investigated by the government. That’s just Elon being Elon. The question was, will he abuse his political power of being co-president, or whatever he calls himself now, to mess with a business competitor? I don’t think he’ll do that. I genuinely don’t. May turn out to be proven wrong.

When the two of you were working together at your best, how would you describe what you each brought to the relationship?

Maybe like a complementary spirit. We don’t know exactly what this is going to be or what we’re going to do or how this is going to go, but we have a shared conviction that this is important, and this is the rough direction to push and how to course-correct.

I’m curious what the actual working relationship was like.

I don’t remember any big blowups with Elon until the fallout that led to the departure. But until then, for all of the stories—people talk about how he berates people and blows up and whatever, I hadn’t experienced that.

Are you surprised by how much capital he’s been able to raise, specifically from the Middle East, for xAI?

No. No. They have a lot of capital. It’s the industry people want. Elon is Elon.

Let’s presume you’re right and there’s positive intent from Elon and the administration. What’s the most helpful thing the Trump administration can do for AI in 2025?

US-built infrastructure and lots of it. The thing I really deeply agree with the president on is, it is wild how difficult it has become to build things in the United States. Power plants, data centers, any of that kind of stuff. I understand how bureaucratic cruft builds up, but it’s not helpful to the country in general. It’s particularly not helpful when you think about what needs to happen for the US to lead AI. And the US really needs to lead AI.

More On Bloomberg

Noticias

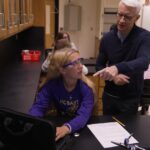

Revivir el compromiso en el aula de español: un desafío musical con chatgpt – enfoque de la facultad

Noticias

5 ChatGPT Prompts That Can Help Teens Launch a Startup

Teen entrepreneur using ChatGPT to help with his business

Teen entrepreneurship continues to rise. According to Junior Achievement research, 66% of U.S. teens ages 13 to 17 say they are likely to consider starting a business as adults, and the Global Entrepreneurship Monitor 2023-2024 report finds that 24% of 18- to 24-year-olds are currently entrepreneurs. These young founders aren't just dreaming; they're building real businesses that generate revenue and create social impact, and they're using ChatGPT prompts to help them.

At WIT (Whatever It Takes), the organization I founded in 2009, we've worked with more than 10,000 young entrepreneurs. Over the past year, I've observed a shift in how teens approach business planning. With our guidance, they're using AI tools like ChatGPT not as shortcuts, but as strategic thinking partners to clarify ideas, test concepts and accelerate execution.

The most successful teen entrepreneurs have discovered specific prompts that help them move from idea to execution. These aren't generic brainstorming sessions: they're using targeted questions that address the unique challenges young founders face, namely limited resources, school commitments and the need to prove their concepts quickly.

Here are five ChatGPT prompts that consistently help teen entrepreneurs build businesses that matter.

1. The Problem-First Discovery ChatGPT Prompt

“I notice that [specific group of people] struggle with [specific problem I’ve observed]. Help me understand this problem better by explaining: 1) why this problem exists, 2) what solutions currently exist and why they fall short, 3) how much people might pay to solve it, and 4) three specific ways I could test whether this is a real problem worth solving.”

A teen might use this prompt after noticing that students at school struggle to pay for lunch. Instead of assuming they understand the full scope, they could ask ChatGPT to research school lunch debt as a systemic problem. That research might lead them to create a product-based business whose revenue helps pay off lunch debt, combining profit with purpose.

Teens notice problems differently than adults because they experience unique frustrations, from challenges in school organizations to social media to environmental concerns. According to Square's research on Gen Z entrepreneurs, 84% plan to be business owners within five years, which makes them ideal candidates for problem-solving ventures.

2. The Resource Reality Check ChatGPT Prompt

“I'm [age] years old with about [dollar amount] to invest and [number] hours per week available between school and other commitments. Based on these constraints, what are three business models I could realistically launch this summer? For each option, include startup costs, time requirements and the first three steps to get started.”

This prompt addresses the elephant in the room: most teen entrepreneurs have limited money and time. When a 16-year-old entrepreneur uses this approach to evaluate a greeting card business concept, they might discover they can start with $200 and scale gradually. By being realistic about constraints up front, they avoid overcommitting and can build toward sustainable revenue goals.

According to Square's Gen Z report, 45% of young entrepreneurs use their savings to start businesses, and 80% launch online or with a mobile component. These data support the effectiveness of constraint-based planning: when teens work within realistic limitations, they create more sustainable business models.

3. The Customer Voice Simulator ChatGPT Prompt

“Act as a [specific demographic] and give me honest feedback on this business idea: [describe your concept]. What would excite you about it? What concerns would you have? How much would you realistically pay? What would need to change for you to become a customer?”

Teen entrepreneurs often struggle with customer research because they can't easily survey large groups or hire market research firms. This prompt helps simulate customer feedback by having ChatGPT adopt specific personas.

A teen developing a podcast for teen athletes might use this approach by asking ChatGPT to respond as different types of teen athletes. This helps identify content topics that resonate and messaging that feels authentic to the target audience.

The prompt works best when you get specific about demographics, pain points and contexts. “Act as a high school senior applying to college” produces better insights than “act as a teenager.”

4. The Minimum Viable Test Designer ChatGPT Prompt

“I want to test this business idea: [describe concept] without spending more than [budget amount] or more than [time commitment]. Design three simple experiments I could run this week to validate customer demand. For each test, explain what I would learn, how to measure success and what results would indicate I should move forward.”

This prompt helps teens adopt the Lean Startup methodology without getting lost in business jargon. The focus on “this week” creates urgency and prevents endless planning without action.

A teen who wants to test a clothing line concept could use this prompt to design simple validation experiments, such as posting design mockups on social media to gauge interest, creating a Google Form to collect preorders and asking friends to share the concept with their networks. These tests cost nothing but provide crucial data about demand and pricing.

5. The Pitch Clarity Generator ChatGPT Prompt

“Turn this business idea into a clear 60-second explanation: [describe your business]. The explanation should include the problem it solves, your solution, who it helps, why people would choose it over the alternatives and what success looks like. Write it in conversational language a teenager would actually use.”

Clear communication separates successful entrepreneurs from those with good ideas but poor execution. This prompt helps teens distill complex concepts into compelling explanations they can use everywhere, from social media posts to conversations with potential mentors.

The emphasis on “conversational language a teenager would actually use” matters. Many business pitch templates sound artificial when delivered by young founders. Authenticity matters more than corporate jargon.

Beyond ChatGPT Prompts: Implementation Strategy

The difference between teens who use these prompts effectively and those who don't comes down to follow-through. ChatGPT provides direction, but action creates results.

The most successful young entrepreneurs I work with use these prompts as starting points, not endpoints. They take the AI-generated suggestions and immediately test them in the real world. They call potential customers, create simple prototypes and iterate based on real feedback.

Recent research from Junior Achievement shows that 69% of teens have business ideas but feel uncertain about how to get started, with fear of failure the top concern for 67% of would-be teen entrepreneurs. These prompts address that uncertainty by turning abstract concepts into concrete next steps.

The Bigger Picture

Teen entrepreneurs using AI tools like ChatGPT represent a shift in how business education happens. According to Global Entrepreneurship Monitor research, young people are 1.6 times more likely than adults to want to start a business, and they are particularly active in the technology, food and beverage, fashion and entertainment sectors. Instead of waiting for formal entrepreneurship classes or MBA programs, these young founders are accessing strategic thinking tools right away.

This trend aligns with broader shifts in education and the workforce. The World Economic Forum identifies creativity, critical thinking and resilience as top skills for 2025, exactly the capabilities that entrepreneurship naturally develops.

Programs like WIT provide structured support for this journey, but the tools themselves are becoming increasingly accessible. A teen with internet access can now reach business planning resources that were previously available only to established entrepreneurs with significant budgets.

The key is to use these tools thoughtfully. ChatGPT can accelerate thinking and provide frameworks, but it can't replace the hard work of building relationships, creating products and serving customers. The best business idea isn't the most original one; it's the one that solves a real problem for real people. AI tools can help identify those opportunities, but only action can turn them into businesses that matter.


ChatGPT vs. Gemini: I've Tested Both, and One Is Definitely Better

Price

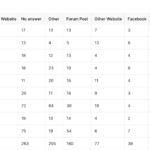

ChatGPT and Gemini both have free versions that limit your access to features and models. Premium plans for both start at around $20 per month. Chatbot features such as deep research, image and video generation, and web search are similar across ChatGPT and Gemini. However, paid Gemini plans also include Google Drive cloud storage (starting at 2TB) and a robust set of integrations across Google Workspace apps.

The highest-end tiers of ChatGPT and Gemini unlock higher usage limits and some unique features, but the prohibitive monthly cost of these plans ($200 for ChatGPT Pro or $250 for Gemini AI Ultra) puts them out of reach for most people. Features specific to ChatGPT's Pro plan, such as o1 pro mode, which taps extra computing power for particularly complicated questions, aren't especially relevant to the average consumer, so you won't feel like you're missing out. However, you'll likely want the features exclusive to Gemini's AI Ultra plan, such as Veo 3 video generation.

Winner: Gemini

Platforms

You can access ChatGPT and Gemini on the web or through mobile apps (Android and iOS). ChatGPT also has desktop apps (macOS and Windows) and an official Google Chrome extension. Gemini doesn't have dedicated desktop apps or a Chrome extension, although it integrates directly with the browser.

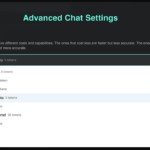

(Credit: OpenAI/PCMag)

ChatGPT is available elsewhere, such as through Siri. As mentioned, you can access Gemini in Google apps such as Calendar, Docs, Drive, Gmail, Maps, Keep, Photos, Sheets and YouTube Music. ChatGPT and Gemini models also appear on sites such as Perplexity. However, you get the most features from these chatbots in their dedicated apps and web portals.

The interfaces of both chatbots are largely consistent across platforms. They're easy to use and don't overwhelm you with options and toggles. ChatGPT has a few more settings to play with, such as the ability to adjust its personality, while Gemini's Deep Research interface makes better use of screen real estate.

Winner: Tie

AI Models

ChatGPT has two primary model series: the 4-series (its flagship conversational line) and the o-series (its complex reasoning line). Gemini similarly offers a general-purpose Flash series and a Pro series for more complicated tasks.

ChatGPT's latest models are o3 and o4-mini, and Gemini's latest are 2.5 Flash and 2.5 Pro. Unless you're coding or solving an equation, you'll spend most of your time using the 4-series and Flash models. Below, you can see how these models perform across a variety of tasks. Which model is better really depends on what you want to do.

Winner: Tie

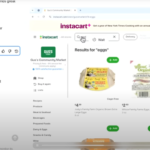

Web Search

ChatGPT and Gemini can both search the web for up-to-date information with ease. However, ChatGPT presents article tiles at the bottom of its responses for further reading, has excellent sourcing that makes it easy to link claims to evidence, includes images in responses when relevant and often provides more detail. Gemini doesn't show source names or full article titles, and it includes article tiles and images only when you use Google's AI Mode. Sourcing in that mode is even less robust; Google relegates sources to clickable carets that don't highlight the relevant parts of its answer.

As part of their web search experiences, ChatGPT and Gemini can help you shop. If you ask for shopping advice, both present tiles with clickable links to retailers. However, Gemini generally suggests better products and has a unique feature that lets you upload a photo of yourself to try on clothes digitally before you buy.

Winner: ChatGPT

Deep Research

ChatGPT and Gemini can both generate reports that run dozens of pages and include more than 50 sources on any topic. The biggest difference between the two comes down to sourcing. Gemini often cites more sources than ChatGPT, but it handles sourcing in Deep Research reports the same way it does in AI Mode search, which means clickable carets without in-text highlights. Because it's harder to connect the claims in Gemini's reports to actual sources, it's harder to trust them. ChatGPT's clear sourcing with in-text highlights is easier to trust. Still, Gemini has some quality-of-life advantages over ChatGPT, such as the ability to export properly formatted reports to Google Docs with a single click. Their tone also differs: ChatGPT's reports read like elaborate forum posts, while Gemini's read like academic papers.

Winner: ChatGPT

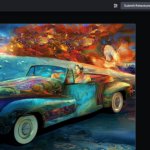

Image Generation

ChatGPT's image generation impresses regardless of what you ask for, even with complex prompts for comic panels or diagrams. It isn't perfect, but errors and distortion are minimal. Gemini generates visually appealing images faster than ChatGPT, but they routinely include noticeable errors and distortion. With complicated prompts, especially diagrams, Gemini produced nonsensical results in testing.

Above, you can see how ChatGPT (first slide) and Gemini (second slide) fared with the following prompt: “Generate an image of a fashion studio with simple, rustic decor that contrasts with the nicer space. Include a brown couch and brick walls.” ChatGPT's image limits its problems to fine detail in the leaves of its plants and the text on its book, while Gemini's image shows more noticeable problems with its cord and lamp.

Winner: ChatGPT


Video Generation

Gemini's video generation is the best in its class, especially because ChatGPT can't match its ability to produce accompanying audio. Google currently locks Gemini's latest video generation model, Veo 3, behind the expensive AI Ultra plan, but you get more realistic videos than with ChatGPT. Gemini also has features ChatGPT lacks, such as the Flow filmmaker tool, which lets you extend generated clips, and the Whisk AI animator, which lets you animate still images. Keep in mind, however, that even with Veo 3 you still need to generate videos several times to get a great result.

In the example above, I asked ChatGPT and Gemini to show me a Rubik's Cube solver solving a cube. The person in Gemini's video looks great, and the accompanying audio is competent. At the end, there's nice attention to detail, with the frame shifting to simulate stopping a selfie recording. Meanwhile, ChatGPT struggled with its cube, heavily distorting it.

Winner: Gemini

File Processing

Understanding files is a strength of both ChatGPT and Gemini. Whether you want them to answer questions about a manual, edit a résumé or tell you something about an image, neither disappoints. However, ChatGPT has the edge over Gemini, offering slightly better image recognition and more detailed answers when you ask about uploaded files. Both chatbots still sometimes make up quotes from provided documents or misinterpret images, so be sure to verify their output.

Winner: ChatGPT

Creative Writing

ChatGPT and Gemini can both generate competent poems, plays, stories and more. ChatGPT, however, stands out because of how unique its responses are and how well it follows prompts. Gemini's responses can feel repetitive if you don't carefully calibrate your requests, and it doesn't always follow every instruction to the letter.

In the example above, I prompted ChatGPT (first slide) and Gemini (second slide) with the following: “Without referencing anything in your memory or previous responses, I want you to write me a free verse poem. Pay special attention to capitalization, enjambment, line breaks and punctuation. Since it's free verse, I don't want a familiar meter or rhyme scheme, but I want it to have a cohesive style.” ChatGPT delivered what I asked for in the prompt, and its poem was distinct from its previous generations. Gemini had trouble generating a poem that used anything beyond commas and periods, and its poem above reads very similarly to one it generated before.


Winner: ChatGPT

Complex Reasoning

ChatGPT's and Gemini's complex reasoning models handle computer science, math and physics questions with ease, and they competently show their work. In testing, ChatGPT gave correct answers slightly more often than Gemini, but their performance is fairly similar. Both chatbots can and will give you wrong answers, so checking their work is still vital if you're doing something important or trying to learn a concept.

Winner: ChatGPT

Integrations

ChatGPT has no meaningful integrations, while Gemini's integrations are a defining feature. Whether you want help editing an essay in Google Docs, want to share a Chrome tab to ask a question, want to try a new YouTube Music playlist tailored to your taste or want to unlock personal insights from Gmail, Gemini can do it all and much more. It's hard to overstate how comprehensive and powerful Gemini's integrations really are.

Winner: Gemini

AI Assistants

ChatGPT has custom GPTs, and Gemini has Gems. Both are customizable AI assistants. Neither is a big upgrade over talking to the chatbots directly, but third-party custom GPTs add new functionality, such as easy access to Canva for editing generated images. Third parties, meanwhile, can't create Gems, and you can't share them. You can let custom GPTs access external information or take external actions, but Gems have no similar functionality.

Winner: ChatGPT

Context Windows and Usage Limits

ChatGPT's context window goes up to 128,000 tokens on its top-tier plans, and all plans have dynamic usage limits based on server load. Gemini, on the other hand, has a 1,000,000-token context window. Google isn't very clear about Gemini's exact usage limits, but they are also dynamic depending on server load. Anecdotally, I couldn't hit the usage limits on ChatGPT's or Gemini's paid plans, but it's much easier to do so on the free plans.

Winner: Gemini

Privacy

Privacy on ChatGPT and Gemini is a mixed bag. Both collect significant amounts of data, including all your chats, and use that data to train their AI models by default. However, both give you the option to turn training off. Google at least doesn't collect and use Gemini data from Workspace apps, such as Gmail, for training purposes by default. ChatGPT and Gemini also promise not to sell your data or use it for ad targeting, but Google and OpenAI have checkered histories when it comes to hacks, leaks and assorted digital misdeeds, so I recommend not sharing anything too sensitive.

Winner: Tie

-

Startups2 años ago

Startups2 años agoRemove.bg: La Revolución en la Edición de Imágenes que Debes Conocer

-

Tutoriales2 años ago

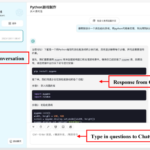

Tutoriales2 años agoCómo Comenzar a Utilizar ChatGPT: Una Guía Completa para Principiantes

-

Startups1 año ago

Startups1 año agoStartups de IA en EE.UU. que han recaudado más de $100M en 2024

-

Startups2 años ago

Startups2 años agoDeepgram: Revolucionando el Reconocimiento de Voz con IA

-

Recursos2 años ago

Recursos2 años agoCómo Empezar con Popai.pro: Tu Espacio Personal de IA – Guía Completa, Instalación, Versiones y Precios

-

Recursos2 años ago

Recursos2 años agoPerplexity aplicado al Marketing Digital y Estrategias SEO

-

Estudiar IA2 años ago

Estudiar IA2 años agoCurso de Inteligencia Artificial de UC Berkeley estratégico para negocios

-

Estudiar IA2 años ago

Estudiar IA2 años agoCurso de Inteligencia Artificial Aplicada de 4Geeks Academy 2024