Noticias

Operai tiene poco recurso legal contra Deepseek, dicen los expertos en derecho tecnológico.

Published

1 año agoon

- Operai y la Casa Blanca han acusado a Deepseek de usar ChatGPT para entrenar a bajo precio a su nuevo chatbot.

- Los expertos en derecho tecnológico dicen que OpenAi tiene poco recurso bajo la propiedad intelectual y el derecho contractual.

- Los términos de uso de OpenAI pueden aplicarse, pero son en gran medida poco aplicados, dicen los expertos.

Esta semana, Openai y la Casa Blanca acusaron a Deep Speek de algo similar al robo.

En una ráfaga de declaraciones de prensa, dijeron que el advenedizo con sede en China había bombardeado los chatbots de OpenAi con consultas, aumentando los datos resultantes para entrenar de manera rápida y económica un modelo que ahora es casi tan bueno.

El “zar” de IA de la administración Trump, dijo que este proceso de capacitación, llamado “destilación”, equivale al robo de propiedad intelectual. Mientras tanto, Openai le dijo a Business Insider y otros puntos de venta que está investigando si “Deepseek puede haber destilado inapropiadamente nuestros modelos”.

Operai no dice que si la compañía planea seguir acciones legales, en lugar de prometer lo que un portavoz llamó “contramedidas agresivas y proactivas para proteger nuestra tecnología”.

¿Pero podrían? ¿Podrían demandar a Deepseek por los terrenos de “Robaste nuestros contenidos”, al igual que los terrenos Openai fue demandado en un reclamo de derechos de autor de 2023 presentado por el New York Times y otros medios de comunicación?

Business Insider planteó esta pregunta a los expertos en derecho de tecnología, quienes dijeron que desafiar a Deepseek en los tribunales sería una batalla cuesta arriba para OpenAi, ahora que el zapato de apropiación de contenido está en el otro pie.

Operai tendría dificultades para demostrar una propiedad intelectual o un reclamo de derechos de autor, dijeron estos abogados.

“La pregunta es si los salidas de ChatGPT”, lo que significa que las respuestas que genera en respuesta a las consultas, “son con derechos de autor en absoluto”, dijo Mason Kortz de Harvard Law School.

Eso es porque no está claro que las respuestas chatgpt escupen calificaciones como “creatividad”, dijo.

“Hay una doctrina que dice que la expresión creativa es con derechos de autor, pero los hechos e ideas no lo son”, explicó Kortz, quien enseña en la Clínica Cyberlaw de Harvard.

“Hay una gran pregunta en la ley de propiedad intelectual en este momento sobre si los resultados de una IA generativa pueden constituir una expresión creativa o si son necesariamente hechos sin protección”.

Podría abrir los dados de todos modos de todos modos, y afirmar que sus salidas en realidad son ¿protegido?

Eso sería poco probable, dijeron los abogados.

Operai ya está en el registro en el caso de derechos de autor del New York Times argumentando que la AI de capacitación es una excepción permitida de “uso justo” a la protección de derechos de autor.

Si hacen un 180 y dicen a Deepseek que el entrenamiento no es un uso justo, “eso podría volver a morderlos”, dijo Kortz. “Deepseek podría decir: ‘Oye, ¿no sabías que el entrenamiento es de uso justo?'”

Podría decirse que hay una distinción entre los casos de los tiempos y los profundos, agrega Kortz.

“Tal vez sea más transformador convertir los artículos de noticias en un modelo”, como el Times acusa a Openi de hacer, “que convertir los resultados de un modelo en otro modelo” como lo ha hecho Deepseek, dijo Kortz.

“Pero esto todavía pone a Openai en una situación bastante complicada con respecto a la línea de que ha sido remolcado con respecto al uso justo”.

Es más probable que un incumplimiento de la demanda por contrato sea

Una demanda por incumplimiento de contrato es mucho más probable que una demanda basada en IP, aunque viene con su propio conjunto de problemas, dijo Anupam Chander, quien enseña ley de tecnología en la Universidad de Georgetown.

Historias relacionadas

Los términos de servicio para chatbots tecnológicos grandes como los desarrollados por OpenAI y Anthopic prohíben usar su contenido como forraje de capacitación para un modelo de IA competidores.

“Entonces, tal vez esa sea la demanda que posiblemente podría presentar, un reclamo basado en contratos, no un reclamo basado en IP”, dijo Chander.

“No ‘copiaste algo de mí’, pero que te beneficiaste de mi modelo para hacer algo que no se le permitió hacer bajo nuestro contrato”.

Hay un posible enganche, Chander y Kortz dicen. Los términos de servicio de OpenAI requieren que la mayoría de las reclamaciones se resuelvan mediante arbitraje, no demandas. Hay una excepción para las demandas “para detener el uso o abuso no autorizados del servicio o infracción de propiedad intelectual o apropiación indebida.

Sin embargo, hay un enganche más grande, dicen los expertos.

“Debe saber que el brillante erudito Mark Lemley y un coautor argumentan que los términos de uso de IA probablemente no sean ejecutables”, dijo Chander. Se refería a un artículo del 10 de enero, el espejismo de las restricciones de términos de uso de inteligencia artificial, por Mark A. Lemley de Stanford Law, y Peter Henderson del Centro de Tecnología de la Información de la Universidad de Princeton.

Hasta la fecha, “ningún creador de modelos ha tratado de hacer cumplir estos términos con sanciones monetarias o medidas cautelares”, dice el periódico.

“Esto es probable por una buena razón: creemos que la ejecución legal de estas licencias es cuestionable”, dice. Esto se debe en parte a que los resultados del modelo “no son en gran medida con derechos de autor” y porque las leyes como la Ley de Derechos de Autor Digital Millennium y la Ley de Fraude y Abuso de la Computadora “ofrecen un recurso limitado”, argumenta.

“Creo que probablemente no sean envejecidos”, dijo Lemley a BI de los términos de servicio de OpenAi, “porque Deepseek no tomó nada con derechos de autor por OpenAi, y porque los tribunales generalmente no harán cumplir los acuerdos para no competir en ausencia de un derecho IP que evitaría esa competencia “.

Las demandas entre las partes en diferentes naciones, cada una con sus propios sistemas legales y de aplicación, siempre son complicadas, dijo Kortz.

Incluso si OpenAi despejó todos los obstáculos anteriores y ganó un juicio de un tribunal o árbitro de los Estados Unidos, “para que se vea profundamente para entregar dinero o dejar de hacer lo que está haciendo, la aplicación se reduciría al sistema legal chino”, dijo. .

Aquí, Openai estaría a merced de otra área de derecho extremadamente complicada, la aplicación de los juicios extranjeros y el equilibrio de los derechos individuales y corporativos y la soberanía nacional, que se remonta antes de la fundación de los Estados Unidos.

“Así que este es, un proceso largo, complicado y tenso”, agregó Kortz.

¿Podría OpenAi haberse protegido mejor de una incursión en destilación?

“Podrían haber usado medidas técnicas para bloquear el acceso repetido a su sitio”, dijo Lemley. “Pero hacerlo también interferiría con los clientes normales”.

Agregó: “No creo que puedan, o deberían, tener un reclamo legal válido contra la búsqueda de información que no se puede ver con el sitio público”.

Los representantes de Deepseek no respondieron de inmediato a una solicitud de comentarios.

“Sabemos que los grupos de la RPC están trabajando activamente para usar métodos, incluida lo que se conoce como destilación, para tratar de replicar modelos avanzados de IA US”, dijo la portavoz de OpenAi, Rhianna Donaldson, a BI en una declaración enviada por correo electrónico.

“Somos conscientes y revisando las indicaciones de que Deepseek puede haber destilado inapropiadamente nuestros modelos y compartirá información como sabemos más”, dijo el comunicado. “Tomamos contramedidas agresivas y proactivas para proteger nuestra tecnología y continuaremos trabajando estrechamente con el gobierno de los Estados Unidos para proteger los modelos más capaces que se están construyendo aquí”.

Noticias

5 indicaciones de chatgpt que pueden ayudar a los adolescentes a lanzar una startup

Published

8 meses agoon

5 junio, 2025

Teen emprendedor que usa chatgpt para ayudarlo con su negocio

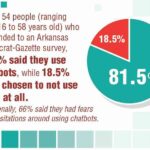

El emprendimiento adolescente sigue en aumento. Según Junior Achievement Research, el 66% de los adolescentes estadounidenses de entre 13 y 17 años dicen que es probable que considere comenzar un negocio como adultos, con el monitor de emprendimiento global 2023-2024 que encuentra que el 24% de los jóvenes de 18 a 24 años son actualmente empresarios. Estos jóvenes fundadores no son solo soñando, están construyendo empresas reales que generan ingresos y crean un impacto social, y están utilizando las indicaciones de ChatGPT para ayudarlos.

En Wit (lo que sea necesario), la organización que fundó en 2009, hemos trabajado con más de 10,000 jóvenes empresarios. Durante el año pasado, he observado un cambio en cómo los adolescentes abordan la planificación comercial. Con nuestra orientación, están utilizando herramientas de IA como ChatGPT, no como atajos, sino como socios de pensamiento estratégico para aclarar ideas, probar conceptos y acelerar la ejecución.

Los emprendedores adolescentes más exitosos han descubierto indicaciones específicas que los ayudan a pasar de una idea a otra. Estas no son sesiones genéricas de lluvia de ideas: están utilizando preguntas específicas que abordan los desafíos únicos que enfrentan los jóvenes fundadores: recursos limitados, compromisos escolares y la necesidad de demostrar sus conceptos rápidamente.

Aquí hay cinco indicaciones de ChatGPT que ayudan constantemente a los emprendedores adolescentes a construir negocios que importan.

1. El problema del primer descubrimiento chatgpt aviso

“Me doy cuenta de que [specific group of people]

luchar contra [specific problem I’ve observed]. Ayúdame a entender mejor este problema explicando: 1) por qué existe este problema, 2) qué soluciones existen actualmente y por qué son insuficientes, 3) cuánto las personas podrían pagar para resolver esto, y 4) tres formas específicas en que podría probar si este es un problema real que vale la pena resolver “.

Un adolescente podría usar este aviso después de notar que los estudiantes en la escuela luchan por pagar el almuerzo. En lugar de asumir que entienden el alcance completo, podrían pedirle a ChatGPT que investigue la deuda del almuerzo escolar como un problema sistémico. Esta investigación puede llevarlos a crear un negocio basado en productos donde los ingresos ayuden a pagar la deuda del almuerzo, lo que combina ganancias con el propósito.

Los adolescentes notan problemas de manera diferente a los adultos porque experimentan frustraciones únicas, desde los desafíos de las organizaciones escolares hasta las redes sociales hasta las preocupaciones ambientales. Según la investigación de Square sobre empresarios de la Generación de la Generación Z, el 84% planea ser dueños de negocios dentro de cinco años, lo que los convierte en candidatos ideales para las empresas de resolución de problemas.

2. El aviso de chatgpt de chatgpt de chatgpt de realidad de la realidad del recurso

“Soy [age] años con aproximadamente [dollar amount] invertir y [number] Horas por semana disponibles entre la escuela y otros compromisos. Según estas limitaciones, ¿cuáles son tres modelos de negocio que podría lanzar de manera realista este verano? Para cada opción, incluya costos de inicio, requisitos de tiempo y los primeros tres pasos para comenzar “.

Este aviso se dirige al elefante en la sala: la mayoría de los empresarios adolescentes tienen dinero y tiempo limitados. Cuando un empresario de 16 años emplea este enfoque para evaluar un concepto de negocio de tarjetas de felicitación, puede descubrir que pueden comenzar con $ 200 y escalar gradualmente. Al ser realistas sobre las limitaciones por adelantado, evitan el exceso de compromiso y pueden construir hacia objetivos de ingresos sostenibles.

Según el informe de Gen Z de Square, el 45% de los jóvenes empresarios usan sus ahorros para iniciar negocios, con el 80% de lanzamiento en línea o con un componente móvil. Estos datos respaldan la efectividad de la planificación basada en restricciones: cuando funcionan los adolescentes dentro de las limitaciones realistas, crean modelos comerciales más sostenibles.

3. El aviso de chatgpt del simulador de voz del cliente

“Actúa como un [specific demographic] Y dame comentarios honestos sobre esta idea de negocio: [describe your concept]. ¿Qué te excitaría de esto? ¿Qué preocupaciones tendrías? ¿Cuánto pagarías de manera realista? ¿Qué necesitaría cambiar para que se convierta en un cliente? “

Los empresarios adolescentes a menudo luchan con la investigación de los clientes porque no pueden encuestar fácilmente a grandes grupos o contratar firmas de investigación de mercado. Este aviso ayuda a simular los comentarios de los clientes haciendo que ChatGPT adopte personas específicas.

Un adolescente que desarrolla un podcast para atletas adolescentes podría usar este enfoque pidiéndole a ChatGPT que responda a diferentes tipos de atletas adolescentes. Esto ayuda a identificar temas de contenido que resuenan y mensajes que se sienten auténticos para el público objetivo.

El aviso funciona mejor cuando se vuelve específico sobre la demografía, los puntos débiles y los contextos. “Actúa como un estudiante de último año de secundaria que solicita a la universidad” produce mejores ideas que “actuar como un adolescente”.

4. El mensaje mínimo de diseñador de prueba viable chatgpt

“Quiero probar esta idea de negocio: [describe concept] sin gastar más de [budget amount] o más de [time commitment]. Diseñe tres experimentos simples que podría ejecutar esta semana para validar la demanda de los clientes. Para cada prueba, explique lo que aprendería, cómo medir el éxito y qué resultados indicarían que debería avanzar “.

Este aviso ayuda a los adolescentes a adoptar la metodología Lean Startup sin perderse en la jerga comercial. El enfoque en “This Week” crea urgencia y evita la planificación interminable sin acción.

Un adolescente que desea probar un concepto de línea de ropa podría usar este indicador para diseñar experimentos de validación simples, como publicar maquetas de diseño en las redes sociales para evaluar el interés, crear un formulario de Google para recolectar pedidos anticipados y pedirles a los amigos que compartan el concepto con sus redes. Estas pruebas no cuestan nada más que proporcionar datos cruciales sobre la demanda y los precios.

5. El aviso de chatgpt del generador de claridad de tono

“Convierta esta idea de negocio en una clara explicación de 60 segundos: [describe your business]. La explicación debe incluir: el problema que resuelve, su solución, a quién ayuda, por qué lo elegirían sobre las alternativas y cómo se ve el éxito. Escríbelo en lenguaje de conversación que un adolescente realmente usaría “.

La comunicación clara separa a los empresarios exitosos de aquellos con buenas ideas pero una ejecución deficiente. Este aviso ayuda a los adolescentes a destilar conceptos complejos a explicaciones convincentes que pueden usar en todas partes, desde las publicaciones en las redes sociales hasta las conversaciones con posibles mentores.

El énfasis en el “lenguaje de conversación que un adolescente realmente usaría” es importante. Muchas plantillas de lanzamiento comercial suenan artificiales cuando se entregan jóvenes fundadores. La autenticidad es más importante que la jerga corporativa.

Más allá de las indicaciones de chatgpt: estrategia de implementación

La diferencia entre los adolescentes que usan estas indicaciones de manera efectiva y aquellos que no se reducen a seguir. ChatGPT proporciona dirección, pero la acción crea resultados.

Los jóvenes empresarios más exitosos con los que trabajo usan estas indicaciones como puntos de partida, no de punto final. Toman las sugerencias generadas por IA e inmediatamente las prueban en el mundo real. Llaman a clientes potenciales, crean prototipos simples e iteran en función de los comentarios reales.

Investigaciones recientes de Junior Achievement muestran que el 69% de los adolescentes tienen ideas de negocios, pero se sienten inciertos sobre el proceso de partida, con el miedo a que el fracaso sea la principal preocupación para el 67% de los posibles empresarios adolescentes. Estas indicaciones abordan esa incertidumbre al desactivar los conceptos abstractos en los próximos pasos concretos.

La imagen más grande

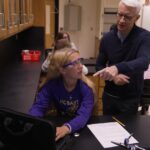

Los emprendedores adolescentes que utilizan herramientas de IA como ChatGPT representan un cambio en cómo está ocurriendo la educación empresarial. Según la investigación mundial de monitores empresariales, los jóvenes empresarios tienen 1,6 veces más probabilidades que los adultos de querer comenzar un negocio, y son particularmente activos en la tecnología, la alimentación y las bebidas, la moda y los sectores de entretenimiento. En lugar de esperar clases de emprendimiento formales o programas de MBA, estos jóvenes fundadores están accediendo a herramientas de pensamiento estratégico de inmediato.

Esta tendencia se alinea con cambios más amplios en la educación y la fuerza laboral. El Foro Económico Mundial identifica la creatividad, el pensamiento crítico y la resiliencia como las principales habilidades para 2025, la capacidad de las capacidades que el espíritu empresarial desarrolla naturalmente.

Programas como WIT brindan soporte estructurado para este viaje, pero las herramientas en sí mismas se están volviendo cada vez más accesibles. Un adolescente con acceso a Internet ahora puede acceder a recursos de planificación empresarial que anteriormente estaban disponibles solo para empresarios establecidos con presupuestos significativos.

La clave es usar estas herramientas cuidadosamente. ChatGPT puede acelerar el pensamiento y proporcionar marcos, pero no puede reemplazar el arduo trabajo de construir relaciones, crear productos y servir a los clientes. La mejor idea de negocio no es la más original, es la que resuelve un problema real para personas reales. Las herramientas de IA pueden ayudar a identificar esas oportunidades, pero solo la acción puede convertirlos en empresas que importan.

Noticias

Chatgpt vs. gemini: he probado ambos, y uno definitivamente es mejor

Published

8 meses agoon

5 junio, 2025

Precio

ChatGPT y Gemini tienen versiones gratuitas que limitan su acceso a características y modelos. Los planes premium para ambos también comienzan en alrededor de $ 20 por mes. Las características de chatbot, como investigaciones profundas, generación de imágenes y videos, búsqueda web y más, son similares en ChatGPT y Gemini. Sin embargo, los planes de Gemini pagados también incluyen el almacenamiento en la nube de Google Drive (a partir de 2TB) y un conjunto robusto de integraciones en las aplicaciones de Google Workspace.

Los niveles de más alta gama de ChatGPT y Gemini desbloquean el aumento de los límites de uso y algunas características únicas, pero el costo mensual prohibitivo de estos planes (como $ 200 para Chatgpt Pro o $ 250 para Gemini Ai Ultra) los pone fuera del alcance de la mayoría de las personas. Las características específicas del plan Pro de ChatGPT, como el modo O1 Pro que aprovecha el poder de cálculo adicional para preguntas particularmente complicadas, no son especialmente relevantes para el consumidor promedio, por lo que no sentirá que se está perdiendo. Sin embargo, es probable que desee las características que son exclusivas del plan Ai Ultra de Gemini, como la generación de videos VEO 3.

Ganador: Géminis

Plataformas

Puede acceder a ChatGPT y Gemini en la web o a través de aplicaciones móviles (Android e iOS). ChatGPT también tiene aplicaciones de escritorio (macOS y Windows) y una extensión oficial para Google Chrome. Gemini no tiene aplicaciones de escritorio dedicadas o una extensión de Chrome, aunque se integra directamente con el navegador.

(Crédito: OpenAI/PCMAG)

Chatgpt está disponible en otros lugares, Como a través de Siri. Como se mencionó, puede acceder a Gemini en las aplicaciones de Google, como el calendario, Documento, ConducirGmail, Mapas, Mantener, FotosSábanas, y Música de YouTube. Tanto los modelos de Chatgpt como Gemini también aparecen en sitios como la perplejidad. Sin embargo, obtiene la mayor cantidad de funciones de estos chatbots en sus aplicaciones y portales web dedicados.

Las interfaces de ambos chatbots son en gran medida consistentes en todas las plataformas. Son fáciles de usar y no lo abruman con opciones y alternar. ChatGPT tiene algunas configuraciones más para jugar, como la capacidad de ajustar su personalidad, mientras que la profunda interfaz de investigación de Gemini hace un mejor uso de los bienes inmuebles de pantalla.

Ganador: empate

Modelos de IA

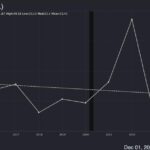

ChatGPT tiene dos series primarias de modelos, la serie 4 (su línea de conversación, insignia) y la Serie O (su compleja línea de razonamiento). Gemini ofrece de manera similar una serie Flash de uso general y una serie Pro para tareas más complicadas.

Los últimos modelos de Chatgpt son O3 y O4-Mini, y los últimos de Gemini son 2.5 Flash y 2.5 Pro. Fuera de la codificación o la resolución de una ecuación, pasará la mayor parte de su tiempo usando los modelos de la serie 4-Series y Flash. A continuación, puede ver cómo funcionan estos modelos en una variedad de tareas. Qué modelo es mejor depende realmente de lo que quieras hacer.

Ganador: empate

Búsqueda web

ChatGPT y Gemini pueden buscar información actualizada en la web con facilidad. Sin embargo, ChatGPT presenta mosaicos de artículos en la parte inferior de sus respuestas para una lectura adicional, tiene un excelente abastecimiento que facilita la vinculación de reclamos con evidencia, incluye imágenes en las respuestas cuando es relevante y, a menudo, proporciona más detalles en respuesta. Gemini no muestra nombres de fuente y títulos de artículos completos, e incluye mosaicos e imágenes de artículos solo cuando usa el modo AI de Google. El abastecimiento en este modo es aún menos robusto; Google relega las fuentes a los caretes que se pueden hacer clic que no resaltan las partes relevantes de su respuesta.

Como parte de sus experiencias de búsqueda en la web, ChatGPT y Gemini pueden ayudarlo a comprar. Si solicita consejos de compra, ambos presentan mosaicos haciendo clic en enlaces a los minoristas. Sin embargo, Gemini generalmente sugiere mejores productos y tiene una característica única en la que puede cargar una imagen tuya para probar digitalmente la ropa antes de comprar.

Ganador: chatgpt

Investigación profunda

ChatGPT y Gemini pueden generar informes que tienen docenas de páginas e incluyen más de 50 fuentes sobre cualquier tema. La mayor diferencia entre los dos se reduce al abastecimiento. Gemini a menudo cita más fuentes que CHATGPT, pero maneja el abastecimiento en informes de investigación profunda de la misma manera que lo hace en la búsqueda en modo AI, lo que significa caretas que se puede hacer clic sin destacados en el texto. Debido a que es más difícil conectar las afirmaciones en los informes de Géminis a fuentes reales, es más difícil creerles. El abastecimiento claro de ChatGPT con destacados en el texto es más fácil de confiar. Sin embargo, Gemini tiene algunas características de calidad de vida en ChatGPT, como la capacidad de exportar informes formateados correctamente a Google Docs con un solo clic. Su tono también es diferente. Los informes de ChatGPT se leen como publicaciones de foro elaboradas, mientras que los informes de Gemini se leen como documentos académicos.

Ganador: chatgpt

Generación de imágenes

La generación de imágenes de ChatGPT impresiona independientemente de lo que solicite, incluso las indicaciones complejas para paneles o diagramas cómicos. No es perfecto, pero los errores y la distorsión son mínimos. Gemini genera imágenes visualmente atractivas más rápido que ChatGPT, pero rutinariamente incluyen errores y distorsión notables. Con indicaciones complicadas, especialmente diagramas, Gemini produjo resultados sin sentido en las pruebas.

Arriba, puede ver cómo ChatGPT (primera diapositiva) y Géminis (segunda diapositiva) les fue con el siguiente mensaje: “Genere una imagen de un estudio de moda con una decoración simple y rústica que contrasta con el espacio más agradable. Incluya un sofá marrón y paredes de ladrillo”. La imagen de ChatGPT limita los problemas al detalle fino en las hojas de sus plantas y texto en su libro, mientras que la imagen de Gemini muestra problemas más notables en su tubo de cordón y lámpara.

Ganador: chatgpt

¡Obtenga nuestras mejores historias!

Toda la última tecnología, probada por nuestros expertos

Regístrese en el boletín de informes de laboratorio para recibir las últimas revisiones de productos de PCMAG, comprar asesoramiento e ideas.

Al hacer clic en Registrarme, confirma que tiene más de 16 años y acepta nuestros Términos de uso y Política de privacidad.

¡Gracias por registrarse!

Su suscripción ha sido confirmada. ¡Esté atento a su bandeja de entrada!

Generación de videos

La generación de videos de Gemini es la mejor de su clase, especialmente porque ChatGPT no puede igualar su capacidad para producir audio acompañante. Actualmente, Google bloquea el último modelo de generación de videos de Gemini, VEO 3, detrás del costoso plan AI Ultra, pero obtienes más videos realistas que con ChatGPT. Gemini también tiene otras características que ChatGPT no, como la herramienta Flow Filmmaker, que le permite extender los clips generados y el animador AI Whisk, que le permite animar imágenes fijas. Sin embargo, tenga en cuenta que incluso con VEO 3, aún necesita generar videos varias veces para obtener un gran resultado.

En el ejemplo anterior, solicité a ChatGPT y Gemini a mostrarme un solucionador de cubos de Rubik Rubik que resuelva un cubo. La persona en el video de Géminis se ve muy bien, y el audio acompañante es competente. Al final, hay una buena atención al detalle con el marco que se desplaza, simulando la detención de una grabación de selfies. Mientras tanto, Chatgpt luchó con su cubo, distorsionándolo en gran medida.

Ganador: Géminis

Procesamiento de archivos

Comprender los archivos es una fortaleza de ChatGPT y Gemini. Ya sea que desee que respondan preguntas sobre un manual, editen un currículum o le informen algo sobre una imagen, ninguno decepciona. Sin embargo, ChatGPT tiene la ventaja sobre Gemini, ya que ofrece un reconocimiento de imagen ligeramente mejor y respuestas más detalladas cuando pregunta sobre los archivos cargados. Ambos chatbots todavía a veces inventan citas de documentos proporcionados o malinterpretan las imágenes, así que asegúrese de verificar sus resultados.

Ganador: chatgpt

Escritura creativa

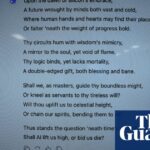

Chatgpt y Gemini pueden generar poemas, obras, historias y más competentes. CHATGPT, sin embargo, se destaca entre los dos debido a cuán únicas son sus respuestas y qué tan bien responde a las indicaciones. Las respuestas de Gemini pueden sentirse repetitivas si no calibra cuidadosamente sus solicitudes, y no siempre sigue todas las instrucciones a la carta.

En el ejemplo anterior, solicité ChatGPT (primera diapositiva) y Gemini (segunda diapositiva) con lo siguiente: “Sin hacer referencia a nada en su memoria o respuestas anteriores, quiero que me escriba un poema de verso gratuito. Preste atención especial a la capitalización, enjambment, ruptura de línea y puntuación. Dado que es un verso libre, no quiero un medidor familiar o un esquema de retiro de la rima, pero quiero que tenga un estilo de coohes. ChatGPT logró entregar lo que pedí en el aviso, y eso era distinto de las generaciones anteriores. Gemini tuvo problemas para generar un poema que incorporó cualquier cosa más allá de las comas y los períodos, y su poema anterior se lee de manera muy similar a un poema que generó antes.

Recomendado por nuestros editores

Ganador: chatgpt

Razonamiento complejo

Los modelos de razonamiento complejos de Chatgpt y Gemini pueden manejar preguntas de informática, matemáticas y física con facilidad, así como mostrar de manera competente su trabajo. En las pruebas, ChatGPT dio respuestas correctas un poco más a menudo que Gemini, pero su rendimiento es bastante similar. Ambos chatbots pueden y le darán respuestas incorrectas, por lo que verificar su trabajo aún es vital si está haciendo algo importante o tratando de aprender un concepto.

Ganador: chatgpt

Integración

ChatGPT no tiene integraciones significativas, mientras que las integraciones de Gemini son una característica definitoria. Ya sea que desee obtener ayuda para editar un ensayo en Google Docs, comparta una pestaña Chrome para hacer una pregunta, pruebe una nueva lista de reproducción de música de YouTube personalizada para su gusto o desbloquee ideas personales en Gmail, Gemini puede hacer todo y mucho más. Es difícil subestimar cuán integrales y poderosas son realmente las integraciones de Géminis.

Ganador: Géminis

Asistentes de IA

ChatGPT tiene GPT personalizados, y Gemini tiene gemas. Ambos son asistentes de IA personalizables. Tampoco es una gran actualización sobre hablar directamente con los chatbots, pero los GPT personalizados de terceros agregan una nueva funcionalidad, como el fácil acceso a Canva para editar imágenes generadas. Mientras tanto, terceros no pueden crear gemas, y no puedes compartirlas. Puede permitir que los GPT personalizados accedan a la información externa o tomen acciones externas, pero las GEM no tienen una funcionalidad similar.

Ganador: chatgpt

Contexto Windows y límites de uso

La ventana de contexto de ChatGPT sube a 128,000 tokens en sus planes de nivel superior, y todos los planes tienen límites de uso dinámicos basados en la carga del servidor. Géminis, por otro lado, tiene una ventana de contexto de 1,000,000 token. Google no está demasiado claro en los límites de uso exactos para Gemini, pero también son dinámicos dependiendo de la carga del servidor. Anecdóticamente, no pude alcanzar los límites de uso usando los planes pagados de Chatgpt o Gemini, pero es mucho más fácil hacerlo con los planes gratuitos.

Ganador: Géminis

Privacidad

La privacidad en Chatgpt y Gemini es una bolsa mixta. Ambos recopilan cantidades significativas de datos, incluidos todos sus chats, y usan esos datos para capacitar a sus modelos de IA de forma predeterminada. Sin embargo, ambos le dan la opción de apagar el entrenamiento. Google al menos no recopila y usa datos de Gemini para fines de capacitación en aplicaciones de espacio de trabajo, como Gmail, de forma predeterminada. ChatGPT y Gemini también prometen no vender sus datos o usarlos para la orientación de anuncios, pero Google y OpenAI tienen historias sórdidas cuando se trata de hacks, filtraciones y diversos fechorías digitales, por lo que recomiendo no compartir nada demasiado sensible.

Ganador: empate

Related posts

Trending

-

Startups2 años ago

Startups2 años agoRemove.bg: La Revolución en la Edición de Imágenes que Debes Conocer

-

Tutoriales2 años ago

Tutoriales2 años agoCómo Comenzar a Utilizar ChatGPT: Una Guía Completa para Principiantes

-

Startups2 años ago

Startups2 años agoStartups de IA en EE.UU. que han recaudado más de $100M en 2024

-

Startups2 años ago

Startups2 años agoDeepgram: Revolucionando el Reconocimiento de Voz con IA

-

Recursos2 años ago

Recursos2 años agoCómo Empezar con Popai.pro: Tu Espacio Personal de IA – Guía Completa, Instalación, Versiones y Precios

-

Recursos2 años ago

Recursos2 años agoPerplexity aplicado al Marketing Digital y Estrategias SEO

-

Estudiar IA2 años ago

Estudiar IA2 años agoCurso de Inteligencia Artificial Aplicada de 4Geeks Academy 2024

-

Estudiar IA2 años ago

Estudiar IA2 años agoCurso de Inteligencia Artificial de UC Berkeley estratégico para negocios